What should be configured in Cortex XDR to integrate asset data from Microsoft Azure for better visibility and incident investigation?

Azure Network Watcher

Cloud Identity Engine

Cloud Inventory

Microsoft 365

Cortex XDR supports integration with cloud platforms like Microsoft Azure to ingest asset data, improving visibility into cloud-based assets and enhancing incident investigation by correlating cloud events with endpoint and network data. The Cloud Inventory feature in Cortex XDR is designed to collect and manage asset data from cloud providers, including Azure, providing details such as virtual machines, storage accounts, and network configurations.

Correct Answer Analysis (C): Cloud Inventory should be configured to integrate asset data from Microsoft Azure. This feature allows Cortex XDR to pull in metadata about Azure assets, such as compute instances, networking resources, and configurations, enabling better visibility and correlation during incident investigations. Administrators configure Cloud Inventory by connecting to Azure via API credentials (e.g., using an Azure service principal) to sync asset data into Cortex XDR.

Why not the other options?

A. Azure Network Watcher: Azure Network Watcher is a Microsoft Azure service for monitoring and diagnosing network issues, but it is not directly integrated with Cortex XDR for asset data ingestion.

B. Cloud Identity Engine: The Cloud Identity Engine integrates with identity providers (e.g., Azure AD) to sync user and group data for identity-based threat detection, not for general asset data like VMs or storage.

D. Microsoft 365: Microsoft 365 integration in Cortex XDR is for ingesting email and productivity suite data (e.g., from Exchange or Teams), not for Azure asset data.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains cloud integrations: “Cloud Inventory integrates with Microsoft Azure to collect asset data, enhancing visibility and incident investigation by providing details on cloud resources” (paraphrased from the Cloud Inventory section). The EDU-260: Cortex XDR Prevention and Deployment course covers cloud data integration, stating that “Cloud Inventory connects to Azure to ingest asset metadata for improved visibility” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “data ingestion and integration” as a key exam topic, encompassing Cloud Inventory setup.

Log events from a previously deployed Windows XDR Collector agent are no longer being observed in the console after an OS upgrade. Which aspect of the log events is the probable cause of this behavior?

They are greater than 5MB

They are in Winlogbeat format

They are in Filebeat format

They are less than 1MB

The XDR Collector on a Windows endpoint collects logs (e.g., Windows Event Logs) and forwards them to the Cortex XDR console for analysis. An OS upgrade can impact the collector’s functionality, particularly if it affects log formats, sizes, or compatibility. If log events are no longer observed after the upgrade, the issue likely relates to a change in how logs are processed or transmitted. Cortex XDR imposes limits on log event sizes to ensure efficient ingestion and processing.

Correct Answer Analysis (A): The probable cause is that the log events are greater than 5MB. Cortex XDR has a size limit for individual log events, typically around 5MB, to prevent performance issues during ingestion. An OS upgrade may change the way logs are generated (e.g., increasing verbosity or adding metadata), causing events to exceed this limit. If log events are larger than 5MB, the XDR Collector will drop them, resulting in no logs being observed in the console.
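The size check described above can be illustrated with a minimal Python sketch. It is not the XDR Collector's implementation: the function name `split_by_size`, the constant `MAX_EVENT_BYTES`, and the choice of 5 × 1024 × 1024 bytes for "5MB" are all assumptions made for illustration.

```python
# Assumed limit: the explanation above says "around 5MB"; the exact byte
# value used by the collector is not stated, so this number is illustrative.
MAX_EVENT_BYTES = 5 * 1024 * 1024


def split_by_size(events, limit=MAX_EVENT_BYTES):
    """Return (kept, dropped) lists based on each event's UTF-8 encoded size."""
    kept, dropped = [], []
    for event in events:
        size = len(event.encode("utf-8"))
        # Events strictly larger than the limit are treated as dropped.
        (dropped if size > limit else kept).append(event)
    return kept, dropped


if __name__ == "__main__":
    small = "x" * 1024
    oversized = "x" * (MAX_EVENT_BYTES + 1)
    kept, dropped = split_by_size([small, oversized])
    print(f"kept={len(kept)} dropped={len(dropped)}")
```

A check like this, run against exported sample events before and after the upgrade, would show whether event sizes crossed the threshold.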

Why not the other options?

B. They are in Winlogbeat format: Winlogbeat is a supported log shipper for collecting Windows Event Logs, and the XDR Collector is compatible with this format. The format itself is not the issue unless misconfigured, which is not indicated.

C. They are in Filebeat format: Filebeat is also supported by the XDR Collector for file-based logs. The format is not the likely cause unless the OS upgrade changed the log source, which is not specified.

D. They are less than 1MB: There is no minimum size limit for log events in Cortex XDR, so being less than 1MB would not cause logs to stop appearing.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains log ingestion limits: “Individual log events larger than 5MB are dropped by the XDR Collector to prevent ingestion issues, which may occur after changes like an OS upgrade” (paraphrased from the XDR Collector Troubleshooting section). The EDU-260: Cortex XDR Prevention and Deployment course covers log collection issues, stating that “log events exceeding 5MB are not ingested, a common issue after OS upgrades that increase log size” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “maintenance and troubleshooting” as a key exam topic, encompassing log ingestion issues.

Which XQL query can be saved as a behavioral indicator of compromise (BIOC) rule, then converted to a custom prevention rule?

dataset = xdr_data

| filter event_type = ENUM.DEVICE and action_process_image_name = "**"

and action_process_image_command_line = "-e cmd*"

and action_process_image_command_line != "*cmd.exe -a /c*"

dataset = xdr_data

| filter event_type = ENUM.PROCESS and event_type = ENUM.DEVICE and action_process_image_name = "**"

and action_process_image_command_line = "-e cmd*"

and action_process_image_command_line != "*cmd.exe -a /c*"

dataset = xdr_data

| filter event_type = FILE and (event_sub_type = FILE_CREATE_NEW or event_sub_type = FILE_WRITE or event_sub_type = FILE_REMOVE or event_sub_type = FILE_RENAME) and agent_hostname = "hostname"

| filter lowercase(action_file_path) in ("/etc/*", "/usr/local/share/*", "/usr/share/*") and action_file_extension in ("conf", "txt")

| fields action_file_name, action_file_path, action_file_type, agent_ip_a

dataset = xdr_data

| filter event_type = ENUM.PROCESS and action_process_image_name = "**"

and action_process_image_command_line = "-e cmd*"

and action_process_image_command_line != "*cmd.exe -a /c*"

In Cortex XDR, a Behavioral Indicator of Compromise (BIOC) rule defines a specific pattern of endpoint behavior (e.g., process execution, file operations, or network activity) that can trigger an alert. BIOCs are often created using XQL (XDR Query Language) queries, which are then saved as BIOC rules to monitor for the specified behavior. To convert a BIOC into a custom prevention rule, the BIOC must be associated with a Restriction profile, which allows the defined behavior to be blocked rather than just detected. For a query to be suitable as a BIOC and convertible to a prevention rule, it must meet the following criteria:

It must monitor a behavior that Cortex XDR can detect on an endpoint, such as process execution, file operations, or device events.

The behavior must be actionable for prevention (e.g., blocking a process or file operation), typically involving events like process launches (ENUM.PROCESS) or file modifications (ENUM.FILE).

The query should not include overly complex logic (e.g., multiple event types with conflicting conditions) that cannot be translated into a BIOC rule.

Let’s analyze each query to determine which one meets these criteria:

Option A: dataset = xdr_data | filter event_type = ENUM.DEVICE ... This query filters for event_type = ENUM.DEVICE, which relates to device-related events (e.g., USB device connections). While device events can be monitored, the additional conditions (action_process_image_name = "**" and action_process_image_command_line) are process-related attributes, which are typically associated with ENUM.PROCESS events, not ENUM.DEVICE. This mismatch makes the query invalid for a BIOC, as it combines incompatible event types and attributes. Additionally, device events are not typically used for custom prevention rules, as prevention rules focus on blocking processes or file operations, not device activities.

Option B: dataset = xdr_data | filter event_type = ENUM.PROCESS and event_type = ENUM.DEVICE ... This query attempts to filter for events that are both ENUM.PROCESS and ENUM.DEVICE (event_type = ENUM.PROCESS and event_type = ENUM.DEVICE), which is logically incorrect because an event cannot have two different event types simultaneously. In XQL, the event_type field must match a single type (e.g., ENUM.PROCESS or ENUM.DEVICE), and combining them with an and operator results in no matches. This makes the query invalid for creating a BIOC rule, as it will not return any results and cannot be used for detection or prevention.

Option C: dataset = xdr_data | filter event_type = FILE ... This query monitors file-related events (event_type = FILE) with specific sub-types (FILE_CREATE_NEW, FILE_WRITE, FILE_REMOVE, FILE_RENAME) on a specific hostname, targeting file paths (/etc/*, /usr/local/share/*, /usr/share/*) and extensions (conf, txt). While this query can be saved as a BIOC to detect file operations, it is not ideal for conversion to a custom prevention rule. Cortex XDR prevention rules typically focus on blocking process executions (via Restriction profiles), not file operations. While file-based BIOCs can generate alerts, converting them to prevention rules is less common, as Cortex XDR’s prevention mechanisms are primarily process-oriented (e.g., terminating a process), not file-oriented (e.g., blocking a file write). Additionally, the query includes complex logic (e.g., multiple sub-types, lowercase() function, fields clause), which may not fully translate to a prevention rule.

Option D: dataset = xdr_data | filter event_type = ENUM.PROCESS ... This query monitors process execution events (event_type = ENUM.PROCESS) where the process image name matches a pattern (action_process_image_name = "**"), the command line includes -e cmd*, and excludes commands matching *cmd.exe -a /c*. This query is well-suited for a BIOC rule, as it defines a specific process behavior (e.g., a process executing with certain command-line arguments) that Cortex XDR can detect on an endpoint. Additionally, this type of BIOC can be converted to a custom prevention rule by associating it with a Restriction profile, which can block the process execution if the conditions are met. For example, the BIOC can be configured to detect processes with action_process_image_name = "**" and action_process_image_command_line = "-e cmd*", and a Restriction profile can terminate such processes to prevent the behavior.

Correct Answer Analysis (D):

Option D is the correct choice because it defines a process-based behavior (ENUM.PROCESS) that can be saved as a BIOC rule to detect the specified activity (processes with certain command-line arguments). It can then be converted to a custom prevention rule by adding it to a Restriction profile, which will block the process execution when the conditions are met. The query’s conditions are straightforward and compatible with Cortex XDR’s BIOC and prevention framework, making it the best fit for the requirement.
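To make the difference between Options B and D concrete, the following Python sketch models the two filters as predicates over a dictionary-shaped event. It is an approximation only: XQL wildcard semantics are assumed to behave like shell-style globs (`fnmatchcase`), the event dictionaries are hypothetical, and the function names are not part of any Cortex XDR API.

```python
from fnmatch import fnmatchcase


def matches_option_d(event):
    """Approximate Option D: a PROCESS event whose command line matches
    '-e cmd*' but does not match '*cmd.exe -a /c*'."""
    name = event.get("action_process_image_name", "")
    cmd = event.get("action_process_image_command_line", "")
    return (
        event.get("event_type") == "PROCESS"
        and fnmatchcase(name, "**")          # "**" accepts any image name
        and fnmatchcase(cmd, "-e cmd*")
        and not fnmatchcase(cmd, "*cmd.exe -a /c*")
    )


def matches_option_b(event):
    """Approximate Option B: one field compared against two different
    values with 'and', which can never be true for a single event."""
    event_type = event.get("event_type")
    return event_type == "PROCESS" and event_type == "DEVICE"


if __name__ == "__main__":
    sample = {
        "event_type": "PROCESS",
        "action_process_image_name": "nc.exe",
        "action_process_image_command_line": "-e cmd.exe",
    }
    print("Option D matches:", matches_option_d(sample))
    print("Option B matches:", matches_option_b(sample))
```

Whatever event is supplied, `matches_option_b` returns `False`, which mirrors why Option B can never produce results.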

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains BIOC and prevention rules: “XQL queries monitoring process events (ENUM.PROCESS) can be saved as BIOC rules to detect specific behaviors, and these BIOCs can be added to a Restriction profile to create custom prevention rules that block the behavior” (paraphrased from the BIOC and Restriction Profile sections). The EDU-260: Cortex XDR Prevention and Deployment course covers BIOC creation, stating that “process-based XQL queries are ideal for BIOCs and can be converted to prevention rules via Restriction profiles to block executions” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “detection engineering” as a key exam topic, encompassing BIOC rule creation and conversion to prevention rules.

How can a Malware profile be configured to prevent a specific executable from being uploaded to the cloud?

Disable on-demand file examination for the executable

Set PE and DLL examination for the executable to report action mode

Add the executable to the allow list for executions

Create an exclusion rule for the executable

In Cortex XDR, Malware profiles define how the agent handles files for analysis, including whether they are uploaded to the cloud for WildFire analysis or other cloud-based inspections. To prevent a specific executable from being uploaded to the cloud, the administrator can configure an exclusion rule in the Malware profile. Exclusion rules allow specific files, directories, or patterns to be excluded from cloud analysis, ensuring they are not sent to the cloud while still allowing local analysis or other policy enforcement.

Correct Answer Analysis (D): Creating an exclusion rule for the executable in the Malware profile ensures that the specified file is not uploaded to the cloud for analysis. This can be done by specifying the file’s name, hash, or path in the exclusion settings, preventing unnecessary cloud uploads while maintaining agent functionality for other files.

Why not the other options?

A. Disable on-demand file examination for the executable: Disabling on-demand file examination prevents the agent from analyzing the file at all, which could compromise security by bypassing local and cloud analysis entirely. This is not the intended solution.

B. Set PE and DLL examination for the executable to report action mode: Setting examination to “report action mode” configures the agent to log actions without blocking or uploading, but it does not specifically prevent cloud uploads. This option is unrelated to controlling cloud analysis.

C. Add the executable to the allow list for executions: Adding an executable to the allow list permits it to run without triggering prevention actions, but it does not prevent the file from being uploaded to the cloud for analysis.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains Malware profile configuration: “Exclusion rules in Malware profiles allow administrators to specify files or directories that are excluded from cloud analysis, preventing uploads to WildFire or other cloud services” (paraphrased from the Malware Profile Configuration section). The EDU-260: Cortex XDR Prevention and Deployment course covers agent configuration, stating that “exclusion rules can be used to prevent specific files from being sent to the cloud for analysis” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “Cortex XDR agent configuration” as a key exam topic, encompassing Malware profile settings.

A security audit determines that the Windows Cortex XDR host-based firewall is not blocking outbound RDP connections for certain remote workers. The audit report confirms the following:

All devices are running healthy Cortex XDR agents.

A single host-based firewall rule to block all outbound RDP is implemented.

The policy hosting the profile containing the rule applies to all Windows endpoints.

The logic within the firewall rule is adequate.

Further testing concludes RDP is successfully being blocked on all devices tested at company HQ.

Network location configuration in Agent Settings is enabled on all Windows endpoints.

What is the likely reason the RDP connections are not being blocked?

The profile's default action for outbound traffic is set to Allow

The pertinent host-based firewall rule group is only applied to external rule groups

Report mode is set to Enabled in the report settings under the profile configuration

The pertinent host-based firewall rule group is only applied to internal rule groups

Cortex XDR’s host-based firewall feature allows administrators to define rules to control network traffic on endpoints, such as blocking outbound Remote Desktop Protocol (RDP) connections (typically on TCP port 3389). The firewall rules are organized into rule groups, which can be applied based on the endpoint’s network location (e.g., internal or external). The network location configuration in Agent Settings determines whether an endpoint is considered internal (e.g., on the company network at HQ) or external (e.g., remote workers on a public network). The audit confirms that a rule to block outbound RDP exists, the rule logic is correct, and it works at HQ but not for remote workers.

Correct Answer Analysis (D): The likely reason RDP connections are not being blocked for remote workers is that the pertinent host-based firewall rule group is only applied to internal rule groups. Since network location configuration is enabled, Cortex XDR distinguishes between internal (e.g., HQ) and external (e.g., remote workers) networks. If the firewall rule group containing the RDP block rule is applied only to internal rule groups, it will only take effect for endpoints at HQ (internal network), as confirmed by the audit. Remote workers, on an external network, would not be subject to this rule group, allowing their outbound RDP connections to proceed.
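The scoping behavior described above can be modeled with a small Python sketch. This is a conceptual illustration, not how the Cortex XDR agent evaluates firewall rules: the `RuleGroup` class, its fields, and the `is_blocked` helper are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleGroup:
    """Hypothetical rule group: which locations it applies to and which
    outbound TCP ports it blocks."""
    name: str
    applies_to: frozenset      # subset of {"internal", "external"}
    blocked_ports: frozenset


def is_blocked(groups, location, port):
    """A port is blocked only if some group both applies to the endpoint's
    current network location and lists that port."""
    return any(
        location in group.applies_to and port in group.blocked_ports
        for group in groups
    )


if __name__ == "__main__":
    # The RDP block is attached to the internal location only.
    groups = [RuleGroup("block-rdp", frozenset({"internal"}), frozenset({3389}))]
    print("HQ endpoint blocked:", is_blocked(groups, "internal", 3389))
    print("Remote worker blocked:", is_blocked(groups, "external", 3389))
```

Adding `"external"` to `applies_to` in this model is the analogue of applying the rule group to external rule groups as well.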

Why not the other options?

A. The profile's default action for outbound traffic is set to Allow: While a default action of Allow could permit traffic not matched by a rule, the audit confirms the RDP block rule’s logic is adequate and works at HQ. This suggests the rule is being applied correctly for internal endpoints, but not for external ones, pointing to a rule group scoping issue rather than the default action.

B. The pertinent host-based firewall rule group is only applied to external rule groups: If the rule group were applied only to external rule groups, remote workers (on external networks) would have RDP blocked, but the audit shows the opposite—RDP is blocked at HQ (internal) but not for remote workers.

C. Report mode is set to Enabled in the report settings under the profile configuration: If report mode were enabled, the firewall rule would only log RDP traffic without blocking it, but this would affect all endpoints (both HQ and remote workers). The audit shows RDP is blocked at HQ, so report mode is not enabled.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains host-based firewall configuration: “Firewall rule groups can be applied to internal or external network locations, as determined by the network location configuration in Agent Settings. Rules applied to internal rule groups will not affect endpoints on external networks” (paraphrased from the Host-Based Firewall section). The EDU-260: Cortex XDR Prevention and Deployment course covers firewall rules, stating that “network location settings determine whether a rule group applies to internal or external endpoints, impacting rule enforcement” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “Cortex XDR agent configuration” as a key exam topic, encompassing host-based firewall settings.

A query is created that will run weekly via API. After it is tested and ready, it is reviewed in the Query Center. Which available column should be checked to determine how many compute units will be used when the query is run?

Query Status

Compute Unit Usage

Simulated Compute Units

Compute Unit Quota

In Cortex XDR, the Query Center allows administrators to manage and review XQL (XDR Query Language) queries, including those scheduled to run via API. Each query consumes compute units, a measure of the computational resources required to execute the query. To determine how many compute units a query will use, the Compute Unit Usage column in the Query Center provides the actual or estimated resource consumption based on the query’s execution history or configuration.

Correct Answer Analysis (B): The Compute Unit Usage column in the Query Center displays the number of compute units consumed by a query when it runs. For a tested and ready query, this column provides the most accurate information on resource usage, helping administrators plan for API-based executions.

Why not the other options?

A. Query Status: The Query Status column indicates whether the query ran successfully, failed, or is pending, but it does not provide information on compute unit consumption.

C. Simulated Compute Units: While some systems may offer simulated estimates, Cortex XDR’s Query Center does not have a “Simulated Compute Units” column. The actual usage is tracked in Compute Unit Usage.

D. Compute Unit Quota: The Compute Unit Quota refers to the total available compute units for the tenant, not the specific usage of an individual query.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains Query Center functionality: “The Compute Unit Usage column in the Query Center shows the compute units consumed by a query, enabling administrators to assess resource usage for scheduled or API-based queries” (paraphrased from the Query Center section). The EDU-262: Cortex XDR Investigation and Response course covers query management, stating that “Compute Unit Usage provides details on the resources used by each query in the Query Center” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “maintenance and troubleshooting” as a key exam topic, encompassing query resource management.

An administrator wants to employ reusable rules within custom parsing rules to apply consistent log field extraction across multiple data sources. Which section of the parsing rule should the administrator use to define those reusable rules in Cortex XDR?

RULE

INGEST

FILTER

CONST

In Cortex XDR, parsing rules are used to extract and normalize fields from log data ingested from various sources to ensure consistent analysis and correlation. To create reusable rules for consistent log field extraction across multiple data sources, administrators use the CONST section within the parsing rule configuration. The CONST section allows the definition of reusable constants or rules that can be applied across different parsing rules, ensuring uniformity in how fields are extracted and processed.

The CONST section is specifically designed to hold constant values or reusable expressions that can be referenced in other parts of the parsing rule, such as the RULE or INGEST sections. This is particularly useful when multiple data sources require similar field extraction logic, as it reduces redundancy and ensures consistency. For example, a constant regex pattern for extracting IP addresses can be defined in the CONST section and reused across multiple parsing rules.
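The idea of defining one pattern and reusing it across extractors can be shown by analogy in Python. This sketch does not use Cortex XDR parsing rule syntax; the constant `IPV4_PATTERN`, both function names, and the sample log formats are hypothetical and exist only to illustrate the reuse described above.

```python
import re

# Shared constant, playing the role the text assigns to a CONST definition.
# It matches dotted-quad shapes and does not validate octet ranges.
IPV4_PATTERN = r"\b(?:\d{1,3}\.){3}\d{1,3}\b"


def extract_ips_from_firewall_log(line):
    """Return every IPv4-shaped token in a firewall-style log line."""
    return re.findall(IPV4_PATTERN, line)


def extract_client_ip_from_proxy_log(line):
    """Return the IP that follows 'client=' in a proxy-style log line,
    or None when the field is absent."""
    match = re.search(r"client=(" + IPV4_PATTERN + r")", line)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(extract_ips_from_firewall_log("DENY src=192.168.1.10 dst=10.0.0.5"))
    print(extract_client_ip_from_proxy_log("GET / client=172.16.0.9 status=200"))
```

Because both extractors reference the same constant, a correction to the pattern is made once and applies to every data source that uses it.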

Why not the other options?

RULE: The RULE section defines the specific logic for parsing and extracting fields from a log entry but is not inherently reusable across multiple rules unless referenced via constants defined in CONST.

INGEST: The INGEST section specifies how raw log data is ingested and preprocessed, not where reusable rules are defined.

FILTER: The FILTER section is used to include or exclude log entries based on conditions, not for defining reusable extraction rules.

Exact Extract or Reference:

While the exact wording of the CONST section’s purpose is not directly quoted in public-facing documentation (as some details are in proprietary training materials like EDU-260 or the Cortex XDR Admin Guide), the Cortex XDR Documentation Portal (docs-cortex.paloaltonetworks.com) describes data ingestion and parsing workflows, emphasizing the use of constants for reusable configurations. The EDU-260: Cortex XDR Prevention and Deployment course covers data onboarding and parsing, noting that “constants defined in the CONST section allow reusable parsing logic for consistent field extraction across sources” (paraphrased from course objectives). Additionally, the Palo Alto Networks Certified XDR Engineer datasheet lists “data source onboarding and integration configuration” as a key skill, which includes mastering parsing rules and their components like CONST.

In addition to using valid authentication credentials, what is required to enable the setup of the Database Collector applet on the Broker VM to ingest database activity?

Valid SQL query targeting the desired data

Access to the database audit log

Database schema exported in the correct format

Access to the database transaction log

The Database Collector applet on the Broker VM in Cortex XDR is used to ingest database activity logs by querying the database directly. To set up the applet, valid authentication credentials (e.g., username and password) are required to connect to the database. Additionally, a valid SQL query must be provided to specify the data to be collected, such as specific tables, columns, or events (e.g., login activity or data modifications).

Correct Answer Analysis (A): A valid SQL query targeting the desired data is required to configure the Database Collector applet. The query defines which database records or events are retrieved and sent to Cortex XDR for analysis. This ensures the applet collects only the relevant data, optimizing ingestion and analysis.
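The role of the SQL query can be illustrated with a self-contained Python sketch that uses an in-memory SQLite database in place of a production database. The table `login_events`, its columns, and the helper names are hypothetical; the Database Collector applet itself is configured in the Broker VM settings, not through code like this.

```python
import sqlite3

# Hypothetical query: selects only the login activity of interest.
QUERY = (
    "SELECT id, username, action FROM login_events "
    "WHERE action = ? ORDER BY id"
)


def build_demo_db():
    """Create an in-memory database with a small, made-up activity table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE login_events ("
        "id INTEGER PRIMARY KEY, username TEXT, action TEXT)"
    )
    conn.executemany(
        "INSERT INTO login_events (username, action) VALUES (?, ?)",
        [("alice", "LOGIN"), ("bob", "LOGOUT"), ("carol", "LOGIN")],
    )
    return conn


def collect_activity(conn, query, params=()):
    """Run the query and return each row as a dict keyed by column name."""
    cursor = conn.execute(query, params)
    columns = [description[0] for description in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


if __name__ == "__main__":
    for record in collect_activity(build_demo_db(), QUERY, ("LOGIN",)):
        print(record)
```

Only rows selected by the query are returned, which is the sense in which the query "targets the desired data".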

Why not the other options?

B. Access to the database audit log: While audit logs may contain relevant activity, the Database Collector applet queries the database directly using SQL, not by accessing audit logs. Audit logs are typically ingested via other methods, such as Filebeat or syslog.

C. Database schema exported in the correct format: The Database Collector does not require an exported schema. The SQL query defines the data structure implicitly, and Cortex XDR maps the queried data to its schema during ingestion.

D. Access to the database transaction log: Transaction logs are used for database recovery or replication, not for direct data collection by the Database Collector applet, which relies on SQL queries.

Exact Extract or Reference:

The Cortex XDR Documentation Portal describes the Database Collector applet: “To configure the Database Collector, provide valid authentication credentials and a valid SQL query to retrieve the desired database activity” (paraphrased from the Broker VM Applets section). The EDU-260: Cortex XDR Prevention and Deployment course covers data ingestion, stating that “the Database Collector applet requires a SQL query to specify the data to ingest from the database” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “data ingestion and integration” as a key exam topic, encompassing Database Collector configuration.

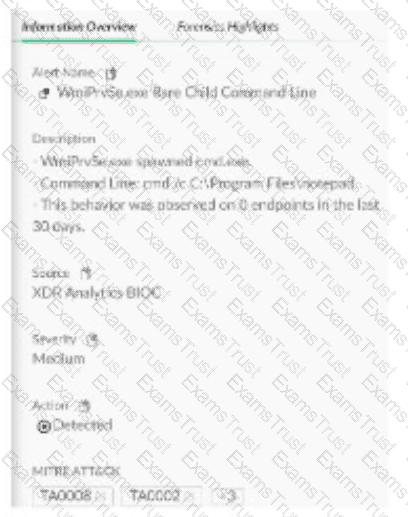

Based on the image of a validated false positive alert below, which action is recommended for resolution?

Create an alert exclusion for OUTLOOK.EXE

Disable an action to the CGO Process DWWIN.EXE

Create an exception for the CGO DWWIN.EXE for ROP Mitigation Module

Create an exception for OUTLOOK.EXE for ROP Mitigation Module

In Cortex XDR, a false positive alert involving OUTLOOK.EXE and the CGO (Causality Group Owner) process DWWIN.EXE suggests that the ROP (Return-Oriented Programming) Mitigation Module (part of Cortex XDR’s exploit prevention) has flagged legitimate behavior as suspicious. ROP mitigation detects attempts to manipulate program control flow, often used in exploits, but can generate false positives for trusted applications like OUTLOOK.EXE. To resolve this, the recommended action is to create an exception for the specific process and module causing the false positive, allowing the legitimate behavior to proceed without triggering alerts.

Correct Answer Analysis (D): Create an exception for OUTLOOK.EXE for ROP Mitigation Module is the recommended action. Since OUTLOOK.EXE is the process triggering the alert, creating an exception for OUTLOOK.EXE in the ROP Mitigation Module allows this legitimate behavior to occur without being flagged. This is done by adding OUTLOOK.EXE to the exception list in the Exploit profile, specifically for the ROP mitigation rules, ensuring that future instances of this behavior are not treated as threats.

Why not the other options?

A. Create an alert exclusion for OUTLOOK.EXE: While an alert exclusion can suppress alerts for OUTLOOK.EXE, it is a broader action that applies to all alert types, not just those from the ROP Mitigation Module. This could suppress other legitimate alerts for OUTLOOK.EXE, reducing visibility into potential threats. An exception in the ROP Mitigation Module is more targeted.

B. Disable an action to the CGO Process DWWIN.EXE: Disabling actions for DWWIN.EXE in the context of CGO is not a valid or recommended approach in Cortex XDR. DWWIN.EXE (Dr. Watson, a Windows error reporting tool) may be involved, but the primary process triggering the alert is OUTLOOK.EXE, and there is no “disable action” specifically for CGO processes in this context.

C. Create an exception for the CGO DWWIN.EXE for ROP Mitigation Module: While DWWIN.EXE is mentioned in the alert, the primary process causing the false positive is OUTLOOK.EXE, as it’s the application initiating the behavior. Creating an exception for DWWIN.EXE would not address the root cause, as OUTLOOK.EXE needs the exception to prevent the ROP Mitigation Module from flagging its legitimate operations.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains false positive resolution: “To resolve false positives in the ROP Mitigation Module, create an exception for the specific process (e.g., OUTLOOK.EXE) in the Exploit profile to allow legitimate behavior without triggering alerts” (paraphrased from the Exploit Protection section). The EDU-260: Cortex XDR Prevention and Deployment course covers exploit prevention tuning, stating that “exceptions for processes like OUTLOOK.EXE in the ROP Mitigation Module prevent false positives while maintaining protection” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “detection engineering” as a key exam topic, encompassing false positive resolution.

An engineer wants to automate the handling of alerts in Cortex XDR and defines several automation rules with different actions to be triggered based on specific alert conditions. Some alerts do not trigger the automation rules as expected. Which statement explains why the automation rules might not apply to certain alerts?

They are executed in sequential order, so alerts may not trigger the correct actions if the rules are not configured properly

They only apply to new alerts grouped into incidents by the system and only alerts that generate incidents trigger automation actions

They can only be triggered by alerts with high severity; alerts with low or informational severity will not trigger the automation rules

They can be applied to any alert, but they only work if the alert is manually grouped into an incident by the analyst

In Cortex XDR, automation rules (also known as response actions or playbooks) are used to automate alert handling based on specific conditions, such as alert type, severity, or source. These rules are executed in a defined order, and the first rule that matches an alert’s conditions triggers its associated actions. If automation rules are not triggering as expected, the issue often lies in their configuration or execution order.

Correct Answer Analysis (A): Automation rules are executed in sequential order, and each alert is evaluated against the rules in the order they are defined. If the rules are not configured properly (e.g., overly broad conditions in an earlier rule or incorrect prioritization), an alert may match an earlier rule and trigger its actions instead of the intended rule, or it may not match any rule due to misconfigured conditions. This explains why some alerts do not trigger the expected automation rules.
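The ordering effect described above can be sketched in Python as a first-match evaluation over an ordered rule list. This models only the behavior as the explanation states it; the rule names, alert fields, and the `first_matching_rule` helper are hypothetical and do not reflect the Cortex XDR rule engine's internals.

```python
def first_matching_rule(alert, rules):
    """Evaluate rules in order and return (name, action) for the first rule
    whose condition accepts the alert, or None if no rule matches."""
    for name, condition, action in rules:
        if condition(alert):
            return name, action
    return None


# Hypothetical rules: a broad one and a more specific one.
BROAD = ("any-agent-alert", lambda a: a["source"] == "XDR Agent", "assign-to-tier1")
SPECIFIC = (
    "high-severity-agent-alert",
    lambda a: a["source"] == "XDR Agent" and a["severity"] == "high",
    "isolate-endpoint",
)

if __name__ == "__main__":
    alert = {"source": "XDR Agent", "severity": "high"}
    # Broad rule first: it shadows the specific rule.
    print(first_matching_rule(alert, [BROAD, SPECIFIC]))
    # Specific rule first: the intended action is selected.
    print(first_matching_rule(alert, [SPECIFIC, BROAD]))
```

With the broad rule listed first, the high-severity alert never reaches the rule intended for it, which is the misconfiguration the answer describes.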

Why not the other options?

B. They only apply to new alerts grouped into incidents by the system and only alerts that generate incidents trigger automation actions: Automation rules can apply to both standalone alerts and those grouped into incidents. They are not limited to incident-related alerts.

C. They can only be triggered by alerts with high severity; alerts with low or informational severity will not trigger the automation rules: Automation rules can be configured to trigger based on any severity level (high, medium, low, or informational), so this is not a restriction.

D. They can be applied to any alert, but they only work if the alert is manually grouped into an incident by the analyst: Automation rules do not require manual incident grouping; they can apply to any alert based on defined conditions, regardless of incident status.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains automation rules: “Automation rules are executed in sequential order, and the first rule matching an alert’s conditions triggers its actions. Misconfigured rules or incorrect ordering can prevent expected actions from being applied” (paraphrased from the Automation Rules section). The EDU-262: Cortex XDR Investigation and Response course covers automation, stating that “sequential execution of automation rules requires careful configuration to ensure the correct actions are triggered” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “playbook creation and automation” as a key exam topic, encompassing automation rule configuration.

An analyst considers an alert with the category of lateral movement to be allowed and not needing to be checked in the future. Based on the image below, which action can an engineer take to address the requirement?

Create a behavioral indicator of compromise (BIOC) suppression rule for the parent process and the specific BIOC: Lateral movement

Create an alert exclusion rule by using the alert source and alert name

Create a disable injection and prevention rule for the parent process indicated in the alert

Create an exception rule for the parent process and the exact command indicated in the alert

In Cortex XDR, a lateral movement alert (mapped to MITRE ATT&CK T1021, e.g., Remote Services) indicates potential unauthorized network activity, often involving processes like cmd.exe. If the analyst determines this behavior is allowed (e.g., a legitimate use of cmd /c dir for administrative purposes) and should not be flagged in the future, the engineer needs to suppress future alerts for this specific behavior. The most effective way to achieve this is by creating an alert exclusion rule, which suppresses alerts based on specific criteria such as the alert source (e.g., Cortex XDR analytics) and alert name (e.g., "Lateral Movement Detected").

Correct Answer Analysis (B): Creating an alert exclusion rule by using the alert source and alert name is the recommended action. This approach directly addresses the requirement by suppressing future alerts of the same type (lateral movement) from the specified source, ensuring that this legitimate activity (e.g., cmd /c dir by cmd.exe) does not generate alerts. Alert exclusions can be fine-tuned to apply to specific endpoints, users, or other attributes, making this a targeted solution.
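As a rough illustration of how an exclusion keyed on alert source and alert name behaves, the Python sketch below filters a list of alerts against exclusion criteria. It models the idea only: the field names (`alert_source`, `alert_name`, `host`), the sample values, and the `is_excluded` helper are assumptions for illustration, not the Cortex XDR schema or API.

```python
def is_excluded(alert: dict, exclusions: list[dict]) -> bool:
    """True when at least one non-empty exclusion matches the alert on every field it names."""
    return any(
        bool(rule) and all(alert.get(field) == value for field, value in rule.items())
        for rule in exclusions
    )


# Hypothetical exclusion: suppress one alert name from one alert source.
exclusions = [
    {"alert_source": "XDR Analytics", "alert_name": "Lateral Movement Detected"},
]

alerts = [
    {"alert_source": "XDR Analytics", "alert_name": "Lateral Movement Detected", "host": "srv-01"},
    {"alert_source": "XDR Analytics", "alert_name": "Credential Dumping", "host": "srv-01"},
    {"alert_source": "XDR BIOC", "alert_name": "Lateral Movement Detected", "host": "srv-02"},
]

# Only alerts that match both the source and the name are suppressed.
visible = [a for a in alerts if not is_excluded(a, exclusions)]
for a in visible:
    print(a["alert_source"], "|", a["alert_name"], "|", a["host"])
```

Adding another key to the exclusion, such as a host, would narrow it further, which mirrors the fine-tuning by endpoint or user mentioned above.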

Why not the other options?

A. Create a behavioral indicator of compromise (BIOC) suppression rule for the parent process and the specific BIOC: Lateral movement: While BIOC suppression rules can suppress specific BIOCs, the alert in question appears to be generated by Cortex XDR analytics (not a custom BIOC), as indicated by the MITRE ATT&CK mapping and alert category. BIOC suppression is more relevant for custom BIOC rules, not analytics-driven alerts.

C. Create a disable injection and prevention rule for the parent process indicated in the alert: There is no “disable injection and prevention rule” in Cortex XDR, and this option does not align with the goal of suppressing alerts. Injection prevention is related to exploit protection, not lateral movement alerts.

D. Create an exception rule for the parent process and the exact command indicated in the alert: While creating an exception for the parent process (cmd.exe) and command (cmd /c dir) might prevent some detections, it is not the most direct method for suppressing analytics-driven lateral movement alerts. Exceptions are typically used for exploit or malware profiles, not for analytics-based alerts.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains alert suppression: “To prevent future checks for allowed alerts, create an alert exclusion rule using the alert source and alert name to suppress specific alert types” (paraphrased from the Alert Management section). The EDU-262: Cortex XDR Investigation and Response course covers alert tuning, stating that “alert exclusion rules based on source and name are effective for suppressing analytics-driven alerts like lateral movement” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “detection engineering” as a key exam topic, encompassing alert suppression techniques.

After deploying Cortex XDR agents to a large group of endpoints, some of the endpoints have a partially protected status. In which two places can insights into what is contributing to this status be located? (Choose two.)

Management Audit Logs

XQL query of the endpoints dataset

All Endpoints page

Asset Inventory

In Cortex XDR, a partially protected status for an endpoint indicates that some agent components or protection modules (e.g., malware protection, exploit prevention) are not fully operational, possibly due to compatibility issues, missing prerequisites, or configuration errors. To troubleshoot this status, engineers need to identify the specific components or issues affecting the endpoint, which can be done by examining detailed endpoint data and status information.

Correct Answer Analysis (B, C):

B. XQL query of the endpoints dataset: An XQL (XDR Query Language) query against the endpoints dataset (e.g., dataset = endpoints | filter endpoint_status = "PARTIALLY_PROTECTED" | fields endpoint_name, protection_status_details) provides detailed insights into the reasons for the partially protected status. The endpoints dataset includes fields like protection_status_details, which specify which modules are not functioning and why.

C. All Endpoints page: The All Endpoints page in the Cortex XDR console displays a list of all endpoints with their statuses, including those that are partially protected. Clicking into an endpoint’s details reveals specific information about the protection status, such as which modules are disabled or encountering issues, helping identify the cause of the status.

Why not the other options?

A. Management Audit Logs: Management Audit Logs track administrative actions (e.g., policy changes, agent installations), but they do not provide detailed insights into the endpoint’s protection status or the reasons for partial protection.

D. Asset Inventory: Asset Inventory provides an overview of assets (e.g., hardware, software) but does not specifically detail the protection status of Cortex XDR agents or the reasons for partial protection.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains troubleshooting partially protected endpoints: “Use the All Endpoints page to view detailed protection status, and run an XQL query against the endpoints dataset to identify specific issues contributing to a partially protected status” (paraphrased from the Endpoint Management section). The EDU-260: Cortex XDR Prevention and Deployment course covers endpoint troubleshooting, stating that “the All Endpoints page and XQL queries of the endpoints dataset provide insights into partial protection issues” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “maintenance and troubleshooting” as a key exam topic, encompassing endpoint status investigation.

Which method will drop undesired logs and reduce the amount of data being ingested?

[COLLECT:vendor="vendor", product="product", target_brokers="", no_hit=drop] * drop _raw_log contains "undesired logs";

[INGEST:vendor="vendor", product="product", target_dataset="vendor_product_raw", no_hit=drop] * filter _raw_log not contains "undesired logs";

[COLLECT:vendor="vendor", product="product", target_dataset="", no_hit=drop] * drop _raw_log contains "undesired logs";

[INGEST:vendor="vendor", product="product", target_brokers="vendor_product_raw", no_hit=keep] * filter _raw_log not contains "undesired logs";

In Cortex XDR, managing data ingestion involves defining rules to collect, filter, or drop logs to optimize storage and processing. The goal is to drop undesired logs to reduce the amount of data ingested. The syntax used in the options appears to be a combination of ingestion rule metadata (e.g., [COLLECT] or [INGEST]) and filtering logic, likely written in a simplified query language for log processing. The drop action explicitly discards logs matching a condition, while filter with not contains can achieve similar results by keeping only logs that do not match the condition.

Correct Answer Analysis (C): The method in option C, [COLLECT:vendor="vendor", product="product", target_dataset="", no_hit=drop] * drop _raw_log contains "undesired logs";, explicitly drops logs where the raw log content contains "undesired logs". The [COLLECT] directive defines the log collection scope (vendor, product, and dataset), and the no_hit=drop parameter indicates that unmatched logs are dropped. The drop _raw_log contains "undesired logs" statement ensures that logs matching the "undesired logs" pattern are discarded, effectively reducing the amount of data ingested.
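The effect of a drop condition on ingestion volume can be sketched in Python. This models only the outcome of `drop _raw_log contains "undesired logs"` on a handful of made-up records; it is not the parsing rule engine, and the sample log lines, the `drop_contains` helper, and the use of case-sensitive substring matching are assumptions for illustration.

```python
def drop_contains(logs: list[dict], needle: str) -> list[dict]:
    """Discard every record whose _raw_log contains the needle; keep the rest."""
    return [log for log in logs if needle not in log["_raw_log"]]


# Hypothetical raw logs arriving from one vendor/product pair.
logs = [
    {"_raw_log": "user login succeeded"},
    {"_raw_log": "heartbeat: undesired logs from debug channel"},
    {"_raw_log": "undesired logs: verbose trace"},
    {"_raw_log": "firewall deny tcp/445"},
]

kept = drop_contains(logs, "undesired logs")

# Compare volume before and after the drop, by record count and by raw size.
chars_before = sum(len(log["_raw_log"]) for log in logs)
chars_after = sum(len(log["_raw_log"]) for log in kept)
print(len(logs), "records in,", len(kept), "records kept")
print(chars_before - chars_after, "characters of raw log no longer ingested")
```

Records that match the condition never reach the dataset in this model, which is why a drop at collection time reduces ingested volume rather than just hiding data at query time.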

Why not the other options?

A. [COLLECT:vendor="vendor", product="product", target_brokers="", no_hit=drop] * drop _raw_log contains "undesired logs";: This is similar to option C but uses target_brokers="", which is typically used for Broker VM configurations rather than direct dataset ingestion. While it could work, option C is more straightforward with target_dataset="".

B. [INGEST:vendor="vendor", product="product", target_dataset="vendor_product_raw", no_hit=drop] * filter _raw_log not contains "undesired logs";: This method uses filter _raw_log not contains "undesired logs" to keep logs that do not match the condition, which indirectly drops undesired logs. However, the drop action in option C is more explicit and efficient for reducing ingestion.

D. [INGEST:vendor="vendor", product="product", target_brokers="vendor_product_raw", no_hit=keep] * filter _raw_log not contains "undesired logs";: The no_hit=keep parameter means unmatched logs are kept, which does not align with the goal of reducing data. The filter statement reduces data, but no_hit=keep may counteract this by retaining unmatched logs, making this less effective than option C.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains log ingestion rules: “To reduce data ingestion, use the drop action to discard logs matching specific patterns, such as _raw_log contains 'pattern'” (paraphrased from the Data Ingestion section). The EDU-260: Cortex XDR Prevention and Deployment course covers data ingestion optimization, stating that “dropping logs with specific content using drop _raw_log contains is an effective way to reduce ingested data volume” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “data ingestion and integration” as a key exam topic, encompassing log filtering and dropping.

Some company employees are able to print documents when working from home, but not on network-attached printers, while others are able to print only to file. What can be inferred about the affected users’ inability to print?

They may be attached to the default extensions policy and profile

They may have a host firewall profile set to block activity to all network-attached printers

They may have different disk encryption profiles that are not allowing print jobs on encrypted files

They may be on different device extensions profiles set to block different print jobs

In Cortex XDR, printing issues can be influenced by agent configurations, particularly those related to network access or device control. The scenario describes two groups of employees: one group can print when working from home but not on network-attached printers, and another can only print to file (e.g., PDF or XPS). This suggests a restriction on network printing, likely due to a security policy enforced by the Cortex XDR agent.

Correct Answer Analysis (B): That the affected users may have a host firewall profile set to block activity to all network-attached printers is the most likely inference. Cortex XDR’s host firewall feature allows administrators to define rules that control network traffic, including blocking outbound connections to network-attached printers (e.g., by blocking protocols like IPP or LPD on specific ports). Employees working from home (on external networks) may be subject to a firewall profile that blocks network printing to prevent data leakage, while local printing (e.g., to USB printers) or printing to file is allowed. The group that can only print to file likely has stricter rules that block all physical printing, allowing only virtual print-to-file operations.

Why not the other options?

A. They may be attached to the default extensions policy and profile: The default extensions policy typically does not include specific restrictions on printing, focusing instead on general agent behavior (e.g., device control or exploit protection). Printing issues are more likely tied to firewall or device control profiles.

C. They may have different disk encryption profiles that are not allowing print jobs on encrypted files: Cortex XDR does not manage disk encryption profiles, and disk encryption (e.g., BitLocker) does not typically block printing based on file encryption status. This is not a relevant cause.

D. They may be on different device extensions profiles set to block different print jobs: While device control profiles can block USB printers, they do not typically control network printing or distinguish between print-to-file and physical printing. Network printing restrictions are more likely enforced by host firewall rules.

Exact Extract or Reference:

The Cortex XDR Documentation Portal explains host firewall capabilities: “Host firewall profiles can block outbound traffic to network-attached printers, restricting printing for remote employees to prevent unauthorized data transfers” (paraphrased from the Host-Based Firewall section). The EDU-260: Cortex XDR Prevention and Deployment course covers firewall configurations, stating that “firewall rules can block network printing while allowing local or virtual printing, often causing printing issues for remote users” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “Cortex XDR agent configuration” as a key exam topic, encompassing host firewall settings.

A cloud administrator reports high network bandwidth costs attributed to Cortex XDR operations and asks for bandwidth usage to be optimized without compromising agent functionality. Which two techniques should the engineer implement? (Choose two.)

Configure P2P download sources for agent upgrades and content updates

Enable minor content version updates

Enable agent content management bandwidth control

Deploy a Broker VM and activate the local agent settings applet

Cortex XDR agents communicate with the cloud for tasks like receiving content updates, agent upgrades, and sending telemetry data, which can consume significant network bandwidth. To optimize bandwidth usage without compromising agent functionality, the engineer should implement techniques that reduce network traffic while maintaining full detection, prevention, and response capabilities.

Correct Answer Analysis (A, C):

A. Configure P2P download sources for agent upgrades and content updates: Peer-to-Peer (P2P) download sources allow Cortex XDR agents to share content updates and agent upgrades with other agents on the same network, reducing the need for each agent to download data directly from the cloud. This significantly lowers bandwidth usage, especially in environments with many endpoints.

C. Enable agent content management bandwidth control: Cortex XDR provides bandwidth control settings in the Content Management configuration, allowing administrators to limit the bandwidth used for content updates and agent communications. This feature throttles data transfers to minimize network impact while ensuring updates are still delivered.
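A back-of-the-envelope calculation shows why these two techniques help. The Python sketch below uses entirely hypothetical figures (1,000 agents, a 50 MB update, 50 agents fetching from the cloud when P2P is enabled, a 10 Mbps cap); none of these numbers come from Cortex XDR documentation, and the helper names are invented for the example.

```python
def cloud_traffic_mb(cloud_downloaders: int, update_mb: int) -> int:
    """Total data pulled from the cloud when this many agents download the update directly."""
    return cloud_downloaders * update_mb


def transfer_seconds(update_mb: int, cap_mbps: int) -> float:
    """Time to move one update under a bandwidth cap (megabytes converted to megabits)."""
    return update_mb * 8 / cap_mbps


# Hypothetical environment.
AGENTS = 1000                # endpoints on the network
UPDATE_MB = 50               # size of one content update
P2P_CLOUD_DOWNLOADERS = 50   # agents that still fetch from the cloud; the rest use peers
CAP_MBPS = 10                # bandwidth cap applied to update traffic

direct = cloud_traffic_mb(AGENTS, UPDATE_MB)
with_p2p = cloud_traffic_mb(P2P_CLOUD_DOWNLOADERS, UPDATE_MB)

print("cloud traffic without P2P:", direct, "MB")
print("cloud traffic with P2P:   ", with_p2p, "MB")
print("one update under the cap: ", transfer_seconds(UPDATE_MB, CAP_MBPS), "seconds")
```

Under these assumptions, P2P reduces the volume pulled from the cloud, while the cap does not reduce volume at all: it spreads each transfer over a longer window so update traffic cannot saturate the link.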

Why not the other options?

B. Enable minor content version updates: Enabling minor content version updates ensures agents receive incremental updates, but this alone does not significantly optimize bandwidth, as it does not address the volume or frequency of data transfers. It is a standard practice but not a primary bandwidth optimization technique.

D. Deploy a Broker VM and activate the local agent settings applet: A Broker VM can act as a local proxy for agent communications, potentially reducing cloud traffic, but the local agent settings applet is used for configuring agent settings locally, not for bandwidth optimization. Additionally, deploying a Broker VM requires significant setup and may not directly address bandwidth for content updates or upgrades compared to P2P or bandwidth control.

Exact Extract or Reference:

The Cortex XDR Documentation Portal describes bandwidth optimization: “P2P download sources enable agents to share content updates and upgrades locally, reducing cloud bandwidth usage” and “Content Management bandwidth control allows administrators to limit the network impact of agent updates” (paraphrased from the Agent Management and Content Updates sections). The EDU-260: Cortex XDR Prevention and Deployment course covers post-deployment optimization, stating that “P2P downloads and bandwidth control settings are key techniques for minimizing network usage” (paraphrased from course materials). The Palo Alto Networks Certified XDR Engineer datasheet includes “post-deployment management and configuration” as a key exam topic, encompassing bandwidth optimization.

Copyright © 2014-2025 Examstrust. All Rights Reserved