HOTSPOT

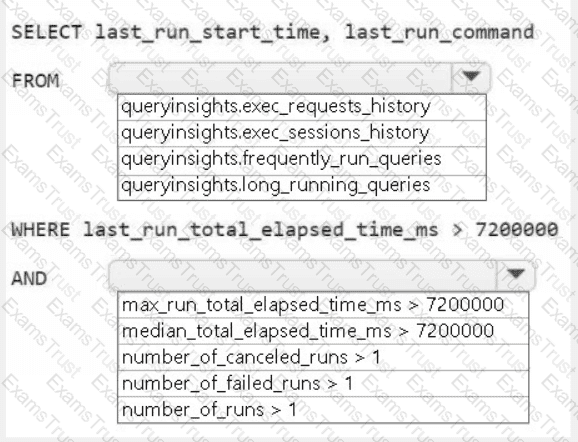

You need to troubleshoot the ad-hoc query issue.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to resolve the sales data issue. The solution must minimize the amount of data transferred.

What should you do?

You need to implement the solution for the book reviews.

What should you do?

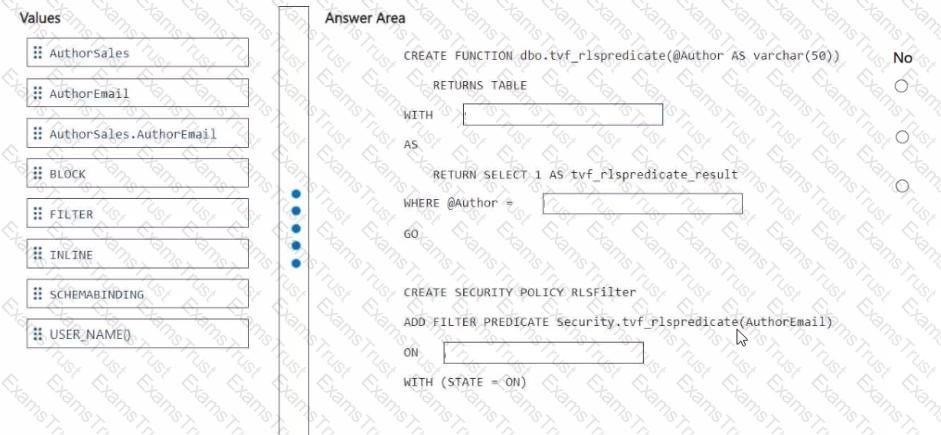

You need to ensure that the authors can see only their respective sales data.

How should you complete the statement? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

You need to create a workflow for the new book cover images.

Which two components should you include in the workflow? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

You need to ensure that processes for the bronze and silver layers run in isolation. How should you configure the Apache Spark settings?

What should you do to optimize the query experience for the business users?

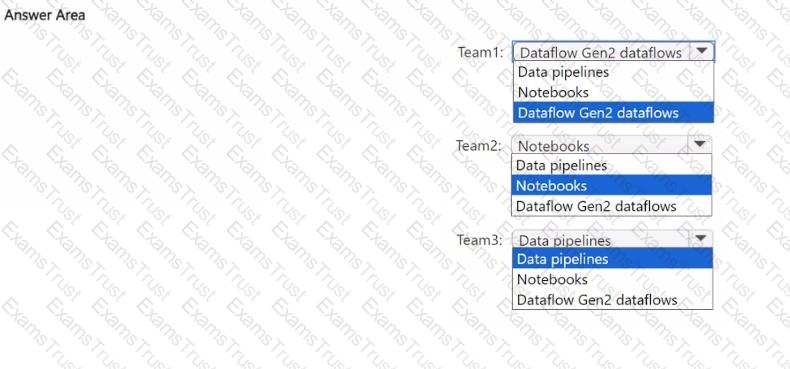

Your company has three newly created data engineering teams named Team1, Team2, and Team3 that plan to use Fabric. The teams have the following personas:

• Team1 consists of members who currently use Microsoft Power BI. The team wants to transform data by using a low-code approach.

• Team2 consists of members who have a background in Python programming. The team wants to use PySpark code to transform data.

• Team3 consists of members who currently use Azure Data Factory. The team wants to move data between source and sink environments by using the least amount of effort.

You need to recommend tools for the teams based on their current personas.

What should you recommend for each team? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

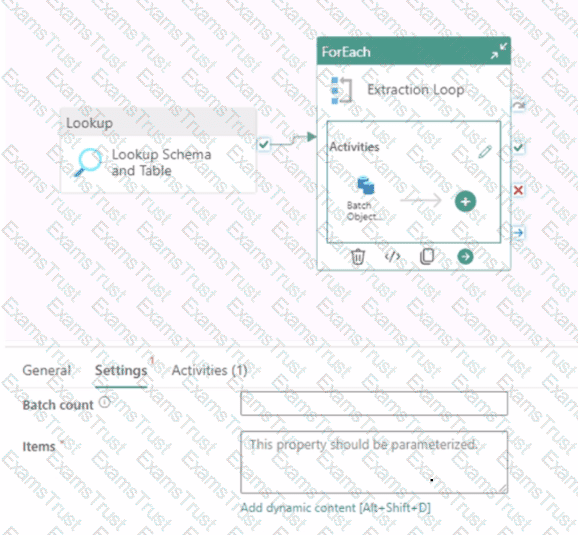

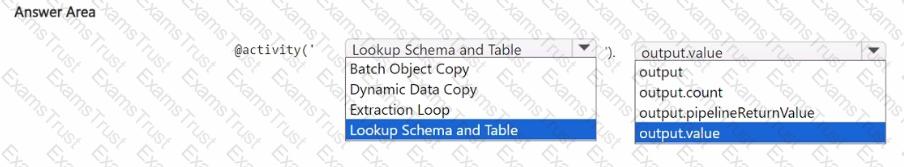

You are building a data orchestration pattern by using a Fabric data pipeline named Dynamic Data Copy as shown in the exhibit. (Click the Exhibit tab.)

Dynamic Data Copy does NOT use parametrization.

You need to configure the ForEach activity to receive the list of tables to be copied.

How should you complete the pipeline expression? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You have an Azure event hub. Each event contains the following fields:

• BikepointID

• Street

• Neighbourhood

• Latitude

• Longitude

• No_Bikes

• No_Empty_Docks

You need to ingest the events. The solution must retain only events that have a Neighbourhood value of Chelsea, and then store the retained events in a Fabric lakehouse.

What should you use?
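The filtering requirement in this question can be illustrated with a minimal Python sketch. It does not use any Fabric or Event Hubs API and does not indicate which tool to choose; it only shows the intended predicate. The function name `retain_chelsea` and the sample values are hypothetical, while the field names come from the question.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical helper: keeps only events whose Neighbourhood is "Chelsea".
# Field names follow the question; everything else is illustrative.
def retain_chelsea(events: Iterable[Dict]) -> Iterator[Dict]:
    for event in events:
        if event.get("Neighbourhood") == "Chelsea":
            yield event

# Made-up sample events, for illustration only.
sample_events = [
    {"BikepointID": "BP1", "Street": "Street A", "Neighbourhood": "Chelsea",
     "Latitude": 51.48, "Longitude": -0.17, "No_Bikes": 5, "No_Empty_Docks": 10},
    {"BikepointID": "BP2", "Street": "Street B", "Neighbourhood": "Soho",
     "Latitude": 51.51, "Longitude": -0.13, "No_Bikes": 2, "No_Empty_Docks": 14},
]

# Only the retained events would be written to the lakehouse.
retained = list(retain_chelsea(sample_events))
```

In this sketch the filter runs before any storage step, which matches the requirement that only the retained events reach the lakehouse.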

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

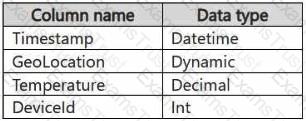

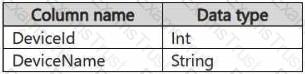

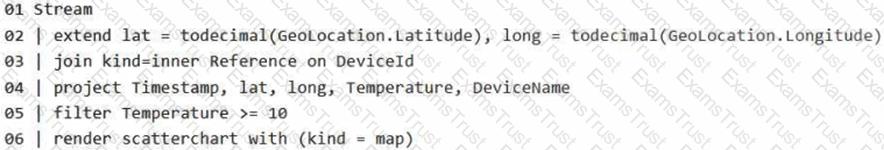

You have a KQL database that contains two tables named Stream and Reference. Stream contains streaming data in the following format.

Reference contains reference data in the following format.

Both tables contain millions of rows.

You have the following KQL queryset.

You need to reduce how long it takes to run the KQL queryset.

Solution: You add the make_list() function to the output columns.

Does this meet the goal?

You need to recommend a solution for handling old files. The solution must meet the technical requirements. What should you include in the recommendation?

You need to populate the MAR1 data in the bronze layer.

Which two types of activities should you include in the pipeline? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

You need to recommend a solution to resolve the MAR1 connectivity issues. The solution must minimize development effort. What should you recommend?

You need to ensure that the data analysts can access the gold layer lakehouse.

What should you do?

You need to schedule the population of the medallion layers to meet the technical requirements.

What should you do?

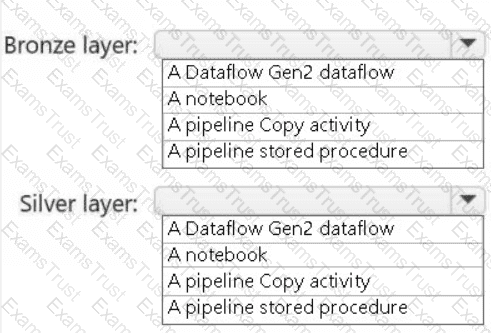

You need to recommend a method to populate the POS1 data to the lakehouse medallion layers.

What should you recommend for each layer? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to ensure that usage of the data in the Amazon S3 bucket meets the technical requirements.

What should you do?

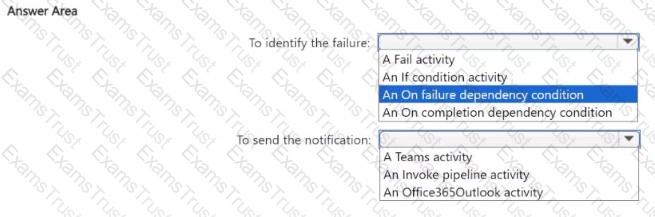

You need to ensure that the data engineers are notified if any step in populating the lakehouses fails. The solution must meet the technical requirements and minimize development effort.

What should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

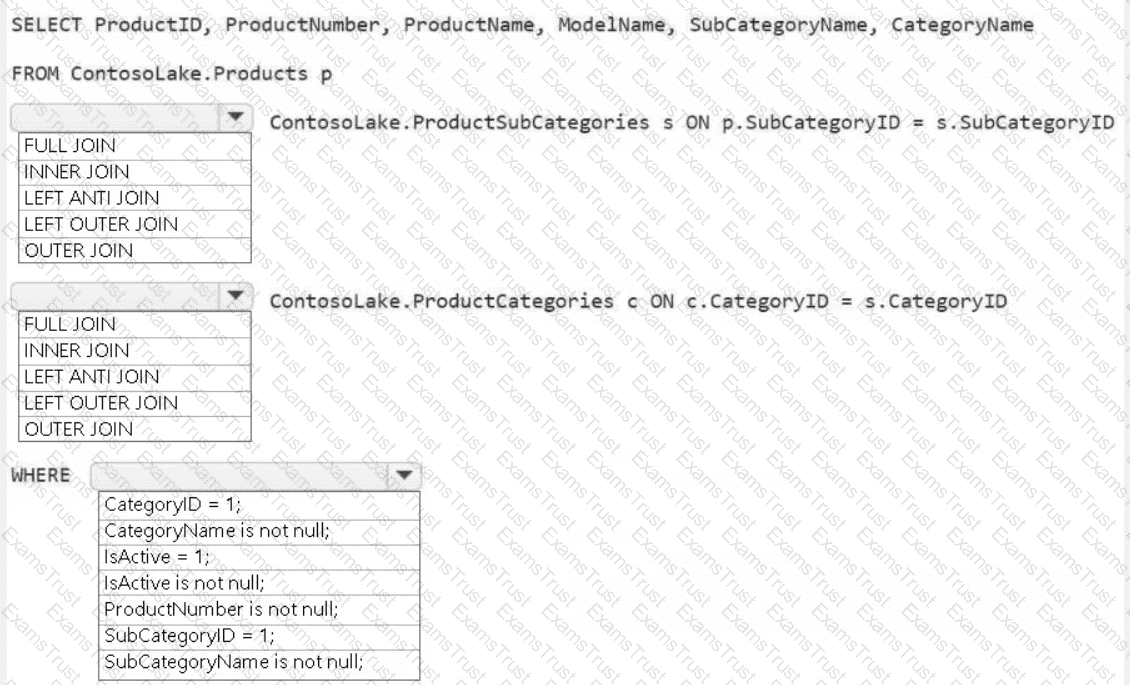

You need to create the product dimension.

How should you complete the Apache Spark SQL code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to ensure that WorkspaceA can be configured for source control.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.