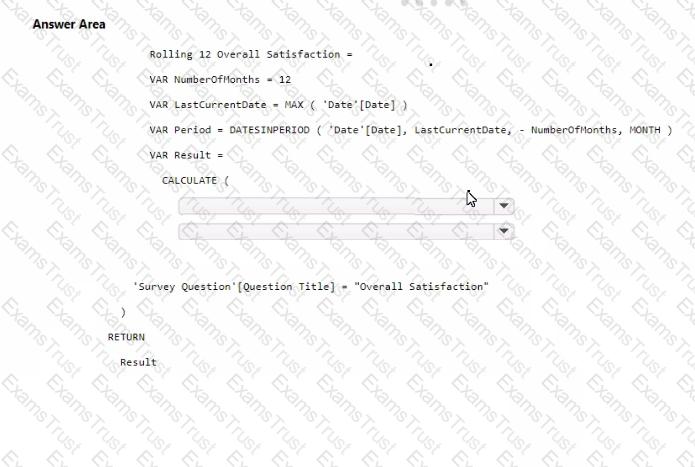

You need to create a DAX measure to calculate the average overall satisfaction score.

How should you complete the DAX code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

What should you recommend using to ingest the customer data into the data store in the AnalyticsPOC workspace?

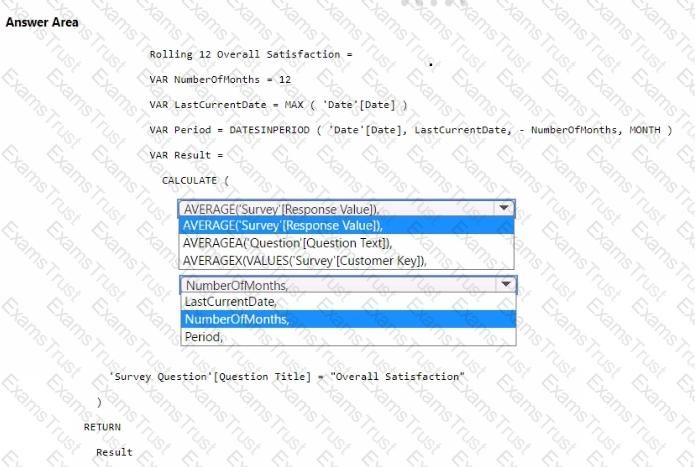

You need to design a semantic model for the customer satisfaction report.

Which data source authentication method and mode should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

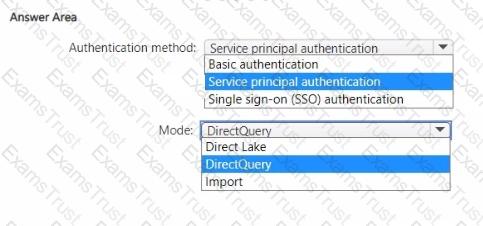

You need to assign permissions for the data store in the AnalyticsPOC workspace. The solution must meet the security requirements.

Which additional permissions should you assign when you share the data store? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to implement the date dimension in the data store. The solution must meet the technical requirements.

What are two ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

You need to recommend a solution to prepare the tenant for the PoC.

Which two actions should you recommend performing from the Fabric Admin portal? Each correct answer presents part of the solution.

NOTE: Each correct answer is worth one point.

You need to ensure the data loading activities in the AnalyticsPOC workspace are executed in the appropriate sequence. The solution must meet the technical requirements.

What should you do?

Which type of data store should you recommend in the AnalyticsPOC workspace?

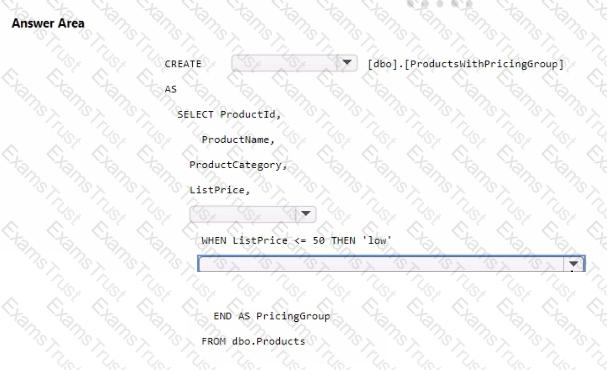

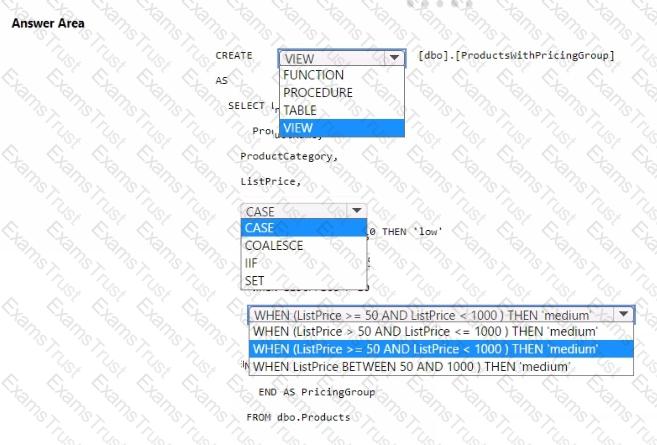

You need to resolve the issue with the pricing group classification.

How should you complete the T-SQL statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

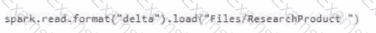

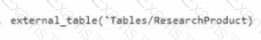

Which syntax should you use in a notebook to access the Research division data for Productline1?

A)

B)

C)

D)

You need to ensure that Contoso can use version control to meet the data analytics requirements and the general requirements. What should you do?

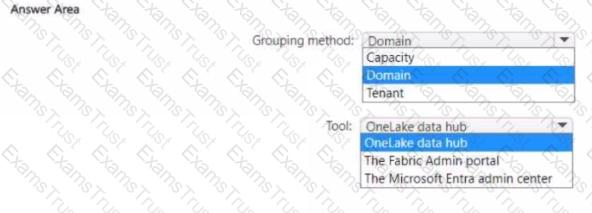

You need to recommend a solution to group the Research division workspaces.

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to refresh the Orders table of the Online Sales department. The solution must meet the semantic model requirements. What should you include in the solution?

You need to recommend which type of Fabric capacity SKU meets the data analytics requirements for the Research division. What should you recommend?

What should you use to implement calculation groups for the Research division semantic models?

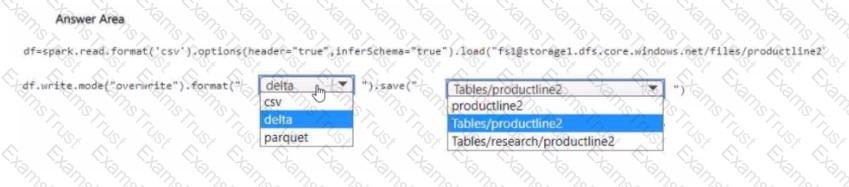

You need to migrate the Research division data for Productline2. The solution must meet the data preparation requirements. How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

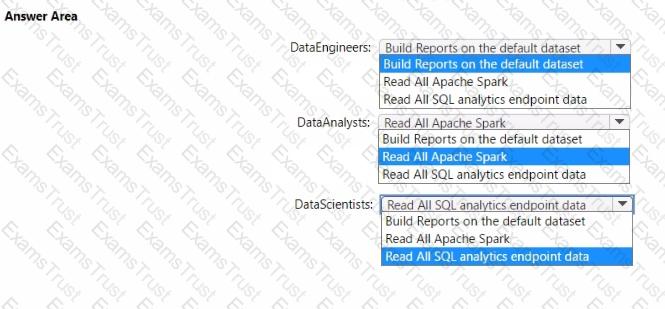

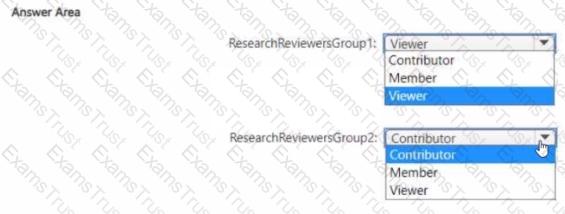

Which workspace role assignments should you recommend for ResearchReviewersGroup1 and ResearchReviewersGroup2? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You need to provide Power BI developers with access to the pipeline. The solution must meet the following requirements:

• Ensure that the developers can deploy items to the workspaces for Development and Test.

• Prevent the developers from deploying items to the workspace for Production.

• Follow the principle of least privilege.

Which three levels of access should you assign to the developers? Each correct answer presents part of the solution.

NOTE: Each correct answer is worth one point.

You have a Fabric tenant that contains a semantic model.

You need to prevent report creators from populating visuals by using implicit measures.

What are two tools that you can use to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct answer is worth one point.

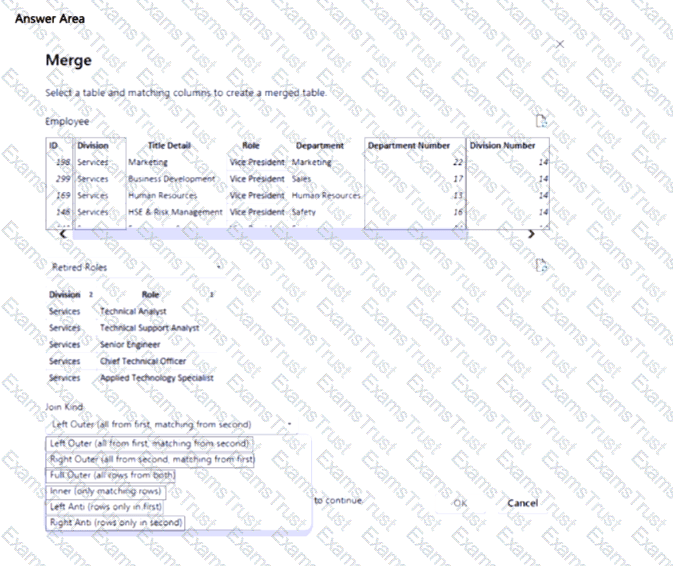

You have two Microsoft Power BI queries named Employee and Retired Roles.

You need to merge the Employee query with the Retired Roles query. The solution must ensure that rows in the Employee query that match the Retired Roles query are removed.

Which column and Join Kind should you use in Power Query Editor? To answer, select the appropriate options in the answer area.

NOTE: Each correct answer is worth one point.
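Keeping only the Employee rows that have no match in Retired Roles is the behavior of a left anti join (the *Left Anti* join kind in Power Query). The minimal Python sketch below shows those semantics outside Power Query; the `Role` matching column, the `left_anti_join` helper, and all sample rows are hypothetical, since the actual columns appear only in the exhibit.

```python
# Hypothetical sample rows: column names and values are illustrative only.
employee = [
    {"EmployeeID": 1, "Name": "Ana", "Role": "Analyst"},
    {"EmployeeID": 2, "Name": "Ben", "Role": "Operator"},
    {"EmployeeID": 3, "Name": "Cy", "Role": "Engineer"},
]
retired_roles = [
    {"Role": "Operator"},
]


def left_anti_join(left, right, key):
    """Keep rows from `left` whose `key` value does not appear in `right`."""
    right_keys = {row[key] for row in right}
    return [row for row in left if row[key] not in right_keys]


result = left_anti_join(employee, retired_roles, "Role")
for row in result:
    print(row["EmployeeID"], row["Role"])
```

The Operator row is dropped because its role exists in Retired Roles; every unmatched Employee row is kept unchanged.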

You are the administrator of a Fabric workspace that contains a lakehouse named Lakehouse1. Lakehouse1 contains the following tables:

• Table1: A Delta table created by using a shortcut

• Table2: An external table created by using Spark

• Table3: A managed table

You plan to connect to Lakehouse1 by using its SQL endpoint. What will you be able to do after connecting to Lakehouse1?

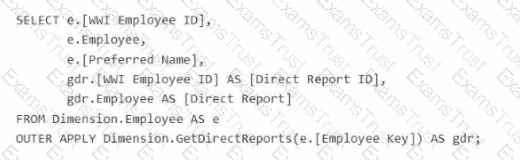

You have a Fabric tenant that contains a warehouse named WH1. You run the following T-SQL query against WH1.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

You have a semantic model named Model1 that contains data that relates to customers and their bank account balances.

Model1 has the following tables and columns.

A customer can have one or more accounts. Each account can be associated with multiple customers.

You need to ensure that users can query Model1 to identify the total transaction amounts by customer.

What should you add to Model1?
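A common way to model a many-to-many relationship such as customers and accounts is a bridge table that holds one row per customer-account pair. The pure-Python sketch below only illustrates how totals by customer resolve through such a bridge; the table names, columns, the `total_by_customer` helper, and all values are hypothetical because the model's actual tables are not reproduced here.

```python
from collections import defaultdict

# Hypothetical tables; names and values are illustrative only.
customer_account = [  # bridge: one row per customer-account pair
    ("C1", "A1"),
    ("C1", "A2"),
    ("C2", "A2"),  # A2 is a joint account shared by C1 and C2
]
transactions = [  # (AccountID, Amount)
    ("A1", 100.0),
    ("A2", 50.0),
    ("A2", 25.0),
]


def total_by_customer(bridge, txns):
    """Sum transaction amounts per customer by resolving accounts through the bridge."""
    amount_by_account = defaultdict(float)
    for account_id, amount in txns:
        amount_by_account[account_id] += amount
    totals = defaultdict(float)
    for customer_id, account_id in bridge:
        totals[customer_id] += amount_by_account[account_id]
    return dict(totals)


print(total_by_customer(customer_account, transactions))
```

A joint account's transactions count toward each of its customers, so the per-customer totals (175 and 75) add up to more than the grand total of 175; this non-additive result is expected with many-to-many relationships.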

You have a Fabric workspace named Workspace1.

You need to create a semantic model named Model1 and publish Model1 to Workspace1. The solution must meet the following requirements:

• Can revert to previous versions of Model1 as required.

• Identifies differences between saved versions of Model1.

• Uses Microsoft Power BI Desktop to publish to Workspace1.

• Can edit item definition files by using Microsoft Visual Studio Code.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

You have a Fabric tenant that contains a warehouse. The warehouse uses row-level security (RLS). You create a Direct Lake semantic model that uses the Delta tables and RLS of the warehouse. When users interact with a report built from the model, which mode will be used by the DAX queries?

You have a Fabric workspace named Workspace1 that is assigned to a newly created Fabric capacity named Capacity1.

You create a semantic model named Model1 and deploy Model1 to Workspace1.

You need to publish changes to Model1 directly from Tabular Editor.

What should you do?

You have a Fabric tenant that contains a warehouse named Warehouse1. Warehouse1 contains three schemas named schemaA, schemaB, and schemaC.

You need to ensure that a user named User1 can truncate tables in schemaA only.

How should you complete the T-SQL statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

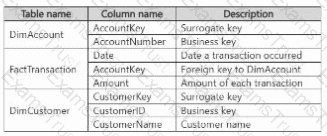

You have a Fabric workspace named Workspace1 that contains a dataflow named Dataflow1. Dataflow1 contains a query that returns the data shown in the following exhibit.

You need to transform the date columns into attribute-value pairs, where columns become rows.

You select the VendorID column.

Which transformation should you select from the context menu of the VendorID column?
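Turning every column except VendorID into attribute-value pairs is an unpivot that keeps VendorID fixed; in Power Query, *Unpivot other columns* on the selected column behaves this way and names the new columns Attribute and Value. The Python sketch below mimics that reshaping on hypothetical vendor rows; the date column names, the values, and the `unpivot_other_columns` helper are invented for illustration because the exhibit is not reproduced here.

```python
# Hypothetical wide rows: one column per date, values are illustrative only.
rows = [
    {"VendorID": "V1", "2024-01-01": 10, "2024-01-02": 12},
    {"VendorID": "V2", "2024-01-01": 7, "2024-01-02": 9},
]


def unpivot_other_columns(rows, keep):
    """Emit one (keep, Attribute, Value) row for every column other than `keep`."""
    out = []
    for row in rows:
        for column, value in row.items():
            if column != keep:
                out.append({keep: row[keep], "Attribute": column, "Value": value})
    return out


long_rows = unpivot_other_columns(rows, "VendorID")
for r in long_rows:
    print(r)
```

Because the sketch unpivots "everything except the kept column" rather than a fixed list of date columns, new date columns added later are picked up automatically, which is the usual reason to prefer this option over unpivoting only the selected columns.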

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

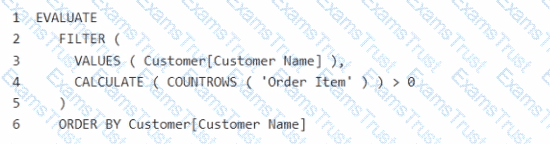

You have a Fabric tenant that contains a semantic model named Model1.

You discover that the following query performs slowly against Model1.

You need to reduce the execution time of the query.

Solution: You replace line 4 by using the following code:

Does this meet the goal?

You have a Fabric tenant that contains a workspace named Workspace1. Workspace1 contains a single semantic model that has two Microsoft Power BI reports.

You have a Microsoft 365 subscription that contains a data loss prevention (DLP) policy named DLP1.

You need to apply DLP1 to the items in Workspace1.

What should you do?

Note: This section contains one or more sets of questions with the same scenario and problem. Each question presents a unique solution to the problem. You must determine whether the solution meets the stated goals. More than one solution in the set might solve the problem. It is also possible that none of the solutions in the set solve the problem.

After you answer a question in this section, you will NOT be able to return. As a result, these questions do not appear on the Review Screen.

Your network contains an on-premises Active Directory Domain Services (AD DS) domain named contoso.com that syncs with a Microsoft Entra tenant by using Microsoft Entra Connect.

You have a Fabric tenant that contains a semantic model.

You enable dynamic row-level security (RLS) for the model and deploy the model to the Fabric service.

You query a measure that includes the USERNAME() function, and the query returns a blank result.

You need to ensure that the measure returns the user principal name (UPN) of a user.

Solution: You update the measure to use the USEROBJECTID() function.

Does this meet the goal?

You have an Azure Data Lake Storage Gen2 account named storage1 that contains a Parquet file named sales.parquet.

You have a Fabric tenant that contains a workspace named Workspace1.

Using a notebook in Workspace1, you need to load the content of the file to the default lakehouse. The solution must ensure that the content will display automatically as a table named Sales in Lakehouse explorer.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

You have a Fabric tenant that contains a warehouse named Warehouse1. Warehouse1 contains two schemas named schema1 and schema2 and a table named schema1.city.

You need to make a copy of schema1.city in schema2. The solution must minimize the copying of data.

Which T-SQL statement should you run?

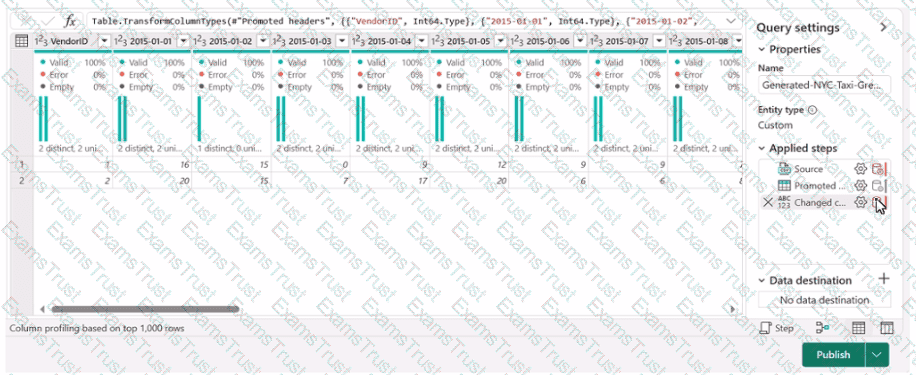

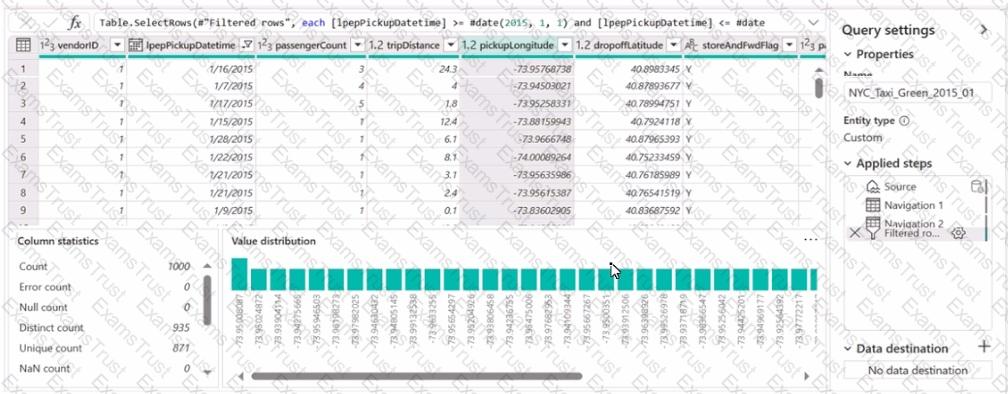

You have a Fabric workspace named Workspace1 that contains a dataflow named Dataflow1. Dataflow1 has a query that returns 2,000 rows. You view the query in Power Query as shown in the following exhibit.

What can you identify about the pickupLongitude column?