Schedule a pod as follows:

Name: nginx-kusc00101

Image: nginx

Node selector: disk=ssd

List the nginx pod with custom columns POD_NAME and POD_STATUS

Print the pod name and start time to the “/opt/pod-status” file.
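Here is a minimal sketch of the three steps above. It assumes that "the nginx pod" means nginx-kusc00101 and that some node already carries the disk=ssd label. Mapping POD_STATUS to `.status.phase` is one reasonable reading of "status", not something the task spells out.

```shell
# Create the pod; nodeSelector restricts scheduling to nodes labelled disk=ssd
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-kusc00101
spec:
  nodeSelector:
    disk: ssd
  containers:
  - name: nginx
    image: nginx
EOF

# custom-columns takes HEADER:JSONPath pairs separated by commas
kubectl get pod nginx-kusc00101 \
  -o custom-columns=POD_NAME:.metadata.name,POD_STATUS:.status.phase

# Pod name and start time (.status.startTime) written to the required file
kubectl get pod nginx-kusc00101 \
  -o jsonpath='{.metadata.name}{" "}{.status.startTime}{"\n"}' > /opt/pod-status
```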

Score: 4%

Task

Schedule a pod as follows:

• Name: nginx-kusc00401

• Image: nginx

• Node selector: disk=ssd
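You can also create the same kind of pod imperatively. The sketch below uses `kubectl run --overrides`, which merges an inline JSON fragment into the generated Pod. It assumes a node labelled disk=ssd exists. Check this first, because otherwise the pod stays Pending.

```shell
# Confirm at least one node carries the label the selector needs
kubectl get nodes -l disk=ssd

# Generate the pod and inject the nodeSelector through an inline JSON override
kubectl run nginx-kusc00401 --image=nginx \
  --overrides='{"apiVersion": "v1", "spec": {"nodeSelector": {"disk": "ssd"}}}'

# The NODE column should show a node labelled disk=ssd
kubectl get pod nginx-kusc00401 -o wide
```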

Set the node named ek8s-node-1 as unavailable and reschedule all the pods running on it.
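`kubectl drain` covers both halves of this task. It cordons the node, then evicts its pods so that their controllers recreate them elsewhere. In the sketch below, `--force` is only needed when unmanaged pods (not owned by a controller) are present. Those pods are deleted rather than rescheduled.

```shell
# Cordon and evict; DaemonSet pods are skipped, emptyDir data on the node is discarded
kubectl drain ek8s-node-1 --ignore-daemonsets --delete-emptydir-data --force

# The node should now report SchedulingDisabled
kubectl get node ek8s-node-1

# Evicted pods should be running on other nodes
kubectl get pods -A -o wide
```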

Create a pod that echoes “hello world” and then exits. Have the pod deleted automatically when it completes.
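One way to get a run-once pod that removes itself is `kubectl run` with `--restart=Never` and `--rm`. The task names neither the pod nor the image, so the pod name hello and the busybox image below are assumptions.

```shell
# --restart=Never creates a bare Pod; --rm -i attaches to it and deletes it once it exits
kubectl run hello --image=busybox --restart=Never --rm -i -- /bin/sh -c 'echo "hello world"'
```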

Create a deployment as follows:

Name: nginx-random

Exposed via a service nginx-random

Ensure that the service and pod are accessible via their respective DNS records

The container(s) within any pod(s) running as part of this deployment should use the nginx image.

Next, use the utility nslookup to look up the DNS records of the service and pod and write the output to /opt/KUNW00601/service.dns and /opt/KUNW00601/pod.dns respectively.
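This sketch covers the deployment, the service and the DNS lookups. It rests on several assumptions:

- the default namespace
- the cluster domain cluster.local
- service port 80
- a throwaway helper pod named dns-test (busybox:1.28 is a common choice for running nslookup)

Pod A records take the form `<pod-ip-with-dashes>.<namespace>.pod.<cluster-domain>`.

```shell
# Deployment with the nginx image; kubectl create labels its pods app=nginx-random
kubectl create deployment nginx-random --image=nginx
kubectl rollout status deployment/nginx-random
# ClusterIP service with the same name, forwarding port 80 to the containers
kubectl expose deployment nginx-random --name=nginx-random --port=80 --target-port=80

# Helper pod that stays up long enough to run the lookups
kubectl run dns-test --image=busybox:1.28 --restart=Never -- sleep 3600
kubectl wait --for=condition=Ready pod/dns-test --timeout=60s

# Service record
kubectl exec dns-test -- nslookup nginx-random.default.svc.cluster.local > /opt/KUNW00601/service.dns

# Pod record: dots in the pod IP become dashes
POD_IP=$(kubectl get pods -l app=nginx-random -o jsonpath='{.items[0].status.podIP}')
POD_DNS="$(echo "$POD_IP" | tr . -).default.pod.cluster.local"
kubectl exec dns-test -- nslookup "$POD_DNS" > /opt/KUNW00601/pod.dns

# Remove the helper pod
kubectl delete pod dns-test
```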

Create a pod with image nginx called nginx and allow traffic on port 80
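A one-line sketch: `--port` declares containerPort 80 on the generated pod. Reading "allow traffic" as also requiring a Service is an assumption. That line is left commented out below.

```shell
# Pod named nginx with containerPort 80 declared
kubectl run nginx --image=nginx --port=80
# Uncomment only if a Service in front of the pod is also wanted
# kubectl expose pod nginx --port=80
```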

Create a namespace called 'development' and a pod with image nginx called nginx in this namespace.
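Both objects can be created imperatively. A minimal sketch:

```shell
kubectl create namespace development
# -n places the pod in the new namespace instead of default
kubectl run nginx --image=nginx -n development
kubectl get pods -n development
```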

Score: 4%

Task

Create a pod named kucc8 with a single app container for each of the following images running inside (there may be between 1 and 4 images specified): nginx + redis + memcached.
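With three images listed, the pod gets three entries under `spec.containers`. The task does not name the containers, so this sketch assumes each one is named after its image.

```shell
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: kucc8
spec:
  containers:
  - name: nginx
    image: nginx
  - name: redis
    image: redis
  - name: memcached
    image: memcached
EOF

# READY should reach 3/3 once all images are pulled
kubectl get pod kucc8
```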

Check to see how many worker nodes are ready (not including nodes tainted NoSchedule) and write the number to /opt/KUCC00104/kucc00104.txt.
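This sketch counts nodes whose Ready condition is True and whose taints include no NoSchedule effect. `grep -w` matches NoSchedule only as a whole word, so PreferNoSchedule does not count as a match. Control-plane nodes usually carry a NoSchedule taint, and so do cordoned nodes (node.kubernetes.io/unschedulable), so both drop out of the count.

```shell
count=0
for node in $(kubectl get nodes -o jsonpath='{.items[*].metadata.name}'); do
  # "True" when the node's Ready condition is healthy
  ready=$(kubectl get node "$node" -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}')
  # Space-separated taint effects; empty if the node is untainted
  effects=$(kubectl get node "$node" -o jsonpath='{.spec.taints[*].effect}')
  if [ "$ready" = "True" ] && ! echo "$effects" | grep -qw NoSchedule; then
    count=$((count + 1))
  fi
done
echo "$count" > /opt/KUCC00104/kucc00104.txt
cat /opt/KUCC00104/kucc00104.txt
```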

Task

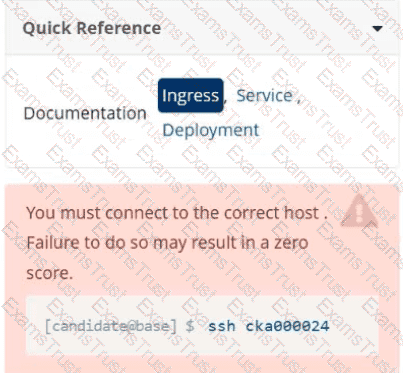

Create a new Ingress resource as follows:

• Name: echo

• Namespace: sound-repeater

• Exposing Service echoserver-service on

using Service port 8080

The availability of Service echoserver-service can be checked using the following command, which should return 200:

[candidate@cka000024] $ curl -o /dev/null -s -w "%{http_code}\n"
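Here is a sketch of the Ingress, with one stated gap. These notes do not preserve the host and path of the URL the task refers to. The rule below therefore matches any host and uses `/` as a placeholder path; adjust both to match the task's URL. If the cluster has no default IngressClass, you may also need to set `spec.ingressClassName`.

```shell
kubectl apply -f - <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echo
  namespace: sound-repeater
spec:
  # ingressClassName: <class>   # set if the cluster has no default IngressClass
  rules:
  - http:                        # add "host: ..." to this rule if the task's URL names a host
      paths:
      - path: /                  # placeholder: use the path from the task's URL
        pathType: Prefix
        backend:
          service:
            name: echoserver-service
            port:
              number: 8080
EOF

# Confirm the rule and backend were accepted
kubectl describe ingress echo -n sound-repeater
# Available IngressClasses (one may be marked as default)
kubectl get ingressclass
```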


You must connect to the correct host.

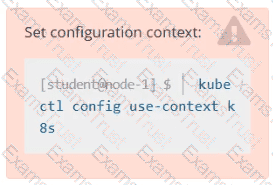

Failure to do so may result in a zero score.

[candidate@base] $ ssh Cka000056

Task

Review and apply the appropriate NetworkPolicy from the provided YAML samples.

Ensure that the chosen NetworkPolicy is not overly permissive, but allows communication between the frontend and backend Deployments, which run in the frontend and backend namespaces respectively.

First, analyze the frontend and backend Deployments to determine the specific requirements for the NetworkPolicy that needs to be applied.

Next, examine the NetworkPolicy YAML samples located in the ~/netpol folder.

Failure to comply may result in a reduced score.

Do not delete or modify the provided samples. Only apply one of them.

Finally, apply the NetworkPolicy that enables communication between the frontend and backend Deployments, without being overly permissive.
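This sketch shows an inspection workflow. The sample filename is a hypothetical placeholder. The point is to compare each sample's selectors and ports with the actual pod and namespace labels before applying exactly one.

```shell
# Pod labels the policy has to select (taken from the Deployment pod templates)
kubectl get deploy -n frontend -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.metadata.labels}{"\n"}{end}'
kubectl get deploy -n backend -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.metadata.labels}{"\n"}{end}'
# Ports the backend containers declare
kubectl get deploy -n backend -o jsonpath='{range .items[*]}{.spec.template.spec.containers[*].ports}{"\n"}{end}'
# Namespace labels (kubernetes.io/metadata.name is set automatically)
kubectl get ns frontend backend --show-labels
# Policies already in place
kubectl get networkpolicy -n frontend
kubectl get networkpolicy -n backend

# Read every sample; prefer the one whose namespaceSelector/podSelector match
# the labels above and that limits ports, over ones that allow everything
ls ~/netpol
cat ~/netpol/*.yaml

# Hypothetical filename: replace with the sample you selected
CHOSEN=~/netpol/REPLACE_WITH_CHOSEN_SAMPLE.yaml
kubectl apply --dry-run=server -f "$CHOSEN"
kubectl apply -f "$CHOSEN"
```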

Create a persistent volume with name app-data, of capacity 2Gi and access mode ReadWriteMany. The type of volume is hostPath and its location is /srv/app-data.
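A minimal manifest, applied from a heredoc. The task does not mention a storage class, so leaving `storageClassName` unset is an assumption.

```shell
kubectl apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-data
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteMany
  hostPath:
    path: /srv/app-data
EOF

# STATUS should show Available until a claim binds it
kubectl get pv app-data
```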

List all the pods showing name and namespace with a JSONPath expression.
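This sketch assumes "all the pods" means every namespace (`-A`). A `range` expression then prints one name/namespace pair per line.

```shell
# {range}...{end} iterates over .items; tab and newline literals format the output
kubectl get pods -A -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.namespace}{"\n"}{end}'
```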

Score: 4%

Context

You have been asked to create a new ClusterRole for a deployment pipeline and bind it to a specific ServiceAccount scoped to a specific namespace.

Task

Create a new ClusterRole named deployment-clusterrole, which only allows the creation of the following resource types:

• Deployment

• StatefulSet

• DaemonSet

Create a new ServiceAccount named cicd-token in the existing namespace app-team1.

Bind the new ClusterRole deployment-clusterrole to the new ServiceAccount cicd-token, limited to the namespace app-team1.
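This is a sketch using imperative commands. Binding a ClusterRole through a RoleBinding, rather than a ClusterRoleBinding, is what limits it to app-team1. The binding name deployment-clusterrole-binding is hypothetical, because the task does not name it.

```shell
# ClusterRole granting only the create verb on the three workload types
kubectl create clusterrole deployment-clusterrole \
  --verb=create --resource=deployments,statefulsets,daemonsets

kubectl create serviceaccount cicd-token -n app-team1

# RoleBinding in app-team1 confines the ClusterRole's permissions to that namespace
kubectl create rolebinding deployment-clusterrole-binding \
  --clusterrole=deployment-clusterrole \
  --serviceaccount=app-team1:cicd-token -n app-team1

# Should print "yes" for app-team1 and "no" for another namespace
kubectl auth can-i create deployments -n app-team1 --as=system:serviceaccount:app-team1:cicd-token
kubectl auth can-i create deployments -n default --as=system:serviceaccount:app-team1:cicd-token
```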

Check the image version of the nginx-dev pod using JSONPath.
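A sketch. `.spec.containers[*].image` lists the image of every container, in case nginx-dev has more than one.

```shell
kubectl get pod nginx-dev -o jsonpath='{.spec.containers[*].image}{"\n"}'
```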

Create a pod with the environment variable var1=value1. Check the environment variable in the pod.
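A sketch. The task names neither the pod nor the image, so the pod name env-demo and the nginx image are assumptions.

```shell
# --env adds the variable to the generated container spec
kubectl run env-demo --image=nginx --env="var1=value1"
kubectl wait --for=condition=Ready pod/env-demo --timeout=60s
# Should print value1
kubectl exec env-demo -- printenv var1
```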

Create a snapshot of the etcd instance running at saving the snapshot to the file path /srv/data/etcd-snapshot.db.

The following TLS certificates/key are supplied for connecting to the server with etcdctl:

CA certificate: /opt/KUCM00302/ca.crt

Client certificate: /opt/KUCM00302/etcd-client.crt

Client key: /opt/KUCM00302/etcd-client.key
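This sketch passes the supplied certificates to `etcdctl snapshot save`. The etcd endpoint is missing from the task text above, so it is read from the variable ETCD_ENDPOINT rather than guessed. The `:?` expansion stops the script if that variable is unset.

```shell
export ETCDCTL_API=3
ETCD_ENDPOINT="${ETCD_ENDPOINT:?set this to the etcd endpoint given in the task}"

etcdctl --endpoints="$ETCD_ENDPOINT" \
  --cacert=/opt/KUCM00302/ca.crt \
  --cert=/opt/KUCM00302/etcd-client.crt \
  --key=/opt/KUCM00302/etcd-client.key \
  snapshot save /srv/data/etcd-snapshot.db

# Sanity check of the saved file (newer etcd releases move this to etcdutl)
etcdctl --write-out=table snapshot status /srv/data/etcd-snapshot.db
```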

Score: 7%

Context

An existing Pod needs to be integrated into the Kubernetes built-in logging architecture (e.g. kubectl logs). Adding a streaming sidecar container is a good and common way to accomplish this requirement.

Task

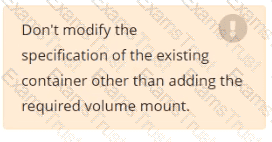

Add a sidecar container named sidecar, using the busybox image, to the existing Pod big-corp-app. The new sidecar container has to run the following command:

/bin/sh -c tail -n+1 -f /var/log/big-corp-app.log

Use a Volume, mounted at /var/log, to make the log file big-corp-app.log available to the sidecar container.
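Containers cannot be added to a running Pod in place. A common approach is to export the manifest, edit it and recreate the Pod. This sketch assumes the existing container writes /var/log/big-corp-app.log and has no volume mounted at /var/log yet. The volume name logs and the emptyDir type are illustrative choices. Giving the command as a list keeps the tail arguments inside the `sh -c` string.

```shell
kubectl get pod big-corp-app -o yaml > big-corp-app.yaml

# Edit big-corp-app.yaml:
#  1. delete status, metadata.uid, metadata.resourceVersion and metadata.creationTimestamp
#  2. add a shared volume under spec.volumes:
#       - name: logs
#         emptyDir: {}
#  3. mount it in the existing container's volumeMounts:
#       - name: logs
#         mountPath: /var/log
#  4. append the sidecar to spec.containers:
#       - name: sidecar
#         image: busybox
#         command: ["/bin/sh", "-c", "tail -n+1 -f /var/log/big-corp-app.log"]
#         volumeMounts:
#         - name: logs
#           mountPath: /var/log

kubectl delete pod big-corp-app
kubectl create -f big-corp-app.yaml

# The sidecar's stdout now carries the log file
kubectl logs big-corp-app -c sidecar
```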

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh Cka000054

Context:

Your cluster's CNI has failed a security audit. It has been removed. You must install a new CNI that can enforce network policies.

Task

Install and set up a Container Network Interface (CNI) that meets these requirements:

Pick and install one of these CNI options:

· Flannel version 0.26.1

Manifest:

· Calico version 3.28.2

Manifest:

calico/calico/v3.28.2/manifests/tigera-operator.yaml
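Flannel on its own does not enforce NetworkPolicy, so Calico is the option that meets the requirement. The sketch below is an operator-based install, with three caveats:

- The manifest URL above is truncated in these notes, so it is read from a variable rather than guessed.
- The pod CIDR lookup assumes a kubeadm cluster, where kube-controller-manager runs as a static pod.
- The Installation resource mirrors the shape of Calico's sample custom-resources.yaml. Check it against the v3.28.2 documentation.

```shell
# Full tigera-operator.yaml URL from the task (truncated in these notes)
TIGERA_OPERATOR_URL="${TIGERA_OPERATOR_URL:?set to the Calico v3.28.2 tigera-operator.yaml URL}"
# create rather than apply: the bundled CRDs are too large for apply's annotation
kubectl create -f "$TIGERA_OPERATOR_URL"
kubectl wait --for=condition=Established crd/installations.operator.tigera.io --timeout=60s

# Pod CIDR the cluster was initialised with (kubeadm assumption; may need sudo)
POD_CIDR=$(sed -n 's/.*--cluster-cidr=//p' /etc/kubernetes/manifests/kube-controller-manager.yaml)
: "${POD_CIDR:?could not find --cluster-cidr; set POD_CIDR manually}"

# Unquoted EOF so that ${POD_CIDR} is expanded into the manifest
kubectl create -f - <<EOF
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  calicoNetwork:
    ipPools:
    - name: default-ipv4-ippool
      blockSize: 26
      cidr: ${POD_CIDR}
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
EOF

# Wait until the operator reports the components as available
kubectl get tigerastatus
kubectl get pods -n calico-system
```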

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh Cka000047

Task

A MariaDB Deployment in the mariadb namespace has been deleted by mistake. Your task is to restore the Deployment ensuring data persistence. Follow these steps:

Create a PersistentVolumeClaim (PVC) named mariadb in the mariadb namespace with the following specifications:

Access mode ReadWriteOnce

Storage 250Mi

You must use the existing retained PersistentVolume (PV).

Failure to do so will result in a reduced score.

There is only one existing PersistentVolume.

Edit the MariaDB Deployment file located at ~/mariadb-deployment.yaml to use the PVC you created in the previous step.

Apply the updated Deployment file to the cluster.

Ensure the MariaDB Deployment is running and stable.
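This sketch pins the claim to the single existing PV through `volumeName` and copies the PV's storage class, so that no default class gets in the way. Two points are assumptions:

- If the PV shows Released, it still references the deleted claim and must have its claimRef cleared before it can bind. That step is left commented out.
- The volume name inside ~/mariadb-deployment.yaml is not known here.

```shell
# The single retained PV: check its STATUS, storage class, capacity and access modes
kubectl get pv
PV_NAME=$(kubectl get pv -o jsonpath='{.items[0].metadata.name}')
PV_SC=$(kubectl get pv "$PV_NAME" -o jsonpath='{.spec.storageClassName}')

# Only if the PV is Released: drop the stale claimRef (data on the volume is kept)
# kubectl patch pv "$PV_NAME" --type=json -p='[{"op":"remove","path":"/spec/claimRef"}]'

# Unquoted EOF so the PV name and class are substituted
kubectl apply -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mariadb
  namespace: mariadb
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 250Mi
  storageClassName: "${PV_SC}"
  volumeName: ${PV_NAME}
EOF
kubectl get pvc mariadb -n mariadb

# In ~/mariadb-deployment.yaml, point the data volume at the claim:
#   volumes:
#   - name: <existing volume name>
#     persistentVolumeClaim:
#       claimName: mariadb
kubectl apply -f ~/mariadb-deployment.yaml
kubectl get deploy,pods,pvc -n mariadb
```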

Configure the kubelet systemd-managed service, on the node labelled with name=wk8s-node-1, to launch a pod containing a single container of image httpd named webtool automatically. Any spec files required should be placed in the /etc/kubernetes/manifests directory on the node.

You can ssh to the appropriate node using:

[student@node-1] $ ssh wk8s-node-1

You can assume elevated privileges on the node with the following command:

[student@wk8s-node-1] $ sudo -i
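A kubelet starts a static pod from any manifest it finds in its staticPodPath directory. Here is a sketch to run as root on the node. It assumes the kubeadm default kubelet config file, /var/lib/kubelet/config.yaml. It also names both the pod and its container webtool, since the task wording allows either reading.

```shell
# Confirm the kubelet watches /etc/kubernetes/manifests
# (if the line is missing, add "staticPodPath: /etc/kubernetes/manifests")
grep staticPodPath /var/lib/kubelet/config.yaml

# Static pod manifest: single httpd container
cat > /etc/kubernetes/manifests/webtool.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: webtool
spec:
  containers:
  - name: webtool
    image: httpd
EOF

# Make sure the systemd unit starts at boot and picks up any config change
systemctl enable kubelet
systemctl restart kubelet
```

From the control plane, `kubectl get pods -o wide` should then list a mirror pod named webtool-<node name>.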