Which two producer exceptions are examples of the class RetriableException? (Select two.)

Clients that connect to a Kafka cluster are required to specify one or more brokers in the bootstrap.servers parameter.

What is the primary advantage of specifying more than one broker?
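A minimal sketch of the setting in question, assuming hypothetical brokers named broker1, broker2 and broker3 on port 9092. The `bootstrap.servers` value is a comma-separated `host:port` list. The client uses these entries only to make a first connection and fetch cluster metadata, so listing several lets it fall back to another entry if one broker is unreachable. The `BootstrapConfig` helper is illustrative, not a Kafka API.

```java
import java.util.List;
import java.util.Properties;

// Builds client properties that list several bootstrap brokers.
// Hostnames passed in by callers are hypothetical placeholders.
final class BootstrapConfig {
    static Properties clientProps(List<String> brokers) {
        Properties props = new Properties();
        // bootstrap.servers is a comma-separated host:port list; the client
        // contacts these entries only to discover the full cluster metadata.
        props.setProperty("bootstrap.servers", String.join(",", brokers));
        return props;
    }
}
```

For example, passing `List.of("broker1:9092", "broker2:9092", "broker3:9092")` produces the value `broker1:9092,broker2:9092,broker3:9092`.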

What are two examples of performance metrics?

(Select two.)

Which partition assignment minimizes partition movements between two assignments?
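To make "partition movements" concrete, here is a hedged sketch that counts how many partitions change owner between an old and a new assignment. The partition labels (t-0, t-1, ...) and consumer labels (c1, c2, ...) are hypothetical. Kafka's sticky assignors (`StickyAssignor`, `CooperativeStickyAssignor`) are designed to keep this count low when a group rebalances.

```java
import java.util.Map;

// Counts partitions whose owning consumer changes between two assignments.
// Keys are partition labels and values are consumer labels (both hypothetical).
final class AssignmentDiff {
    static int movedPartitions(Map<String, String> before, Map<String, String> after) {
        int moved = 0;
        for (Map.Entry<String, String> entry : after.entrySet()) {
            String previousOwner = before.get(entry.getKey());
            // Only partitions that existed before and now have a different owner
            // count as moved; partitions with no previous owner are ignored.
            if (previousOwner != null && !previousOwner.equals(entry.getValue())) {
                moved++;
            }
        }
        return moved;
    }
}
```

If t-1 and t-3 are handed to a new consumer c3 while t-0 and t-2 stay put, the count is 2.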

The producer code below features a Callback class with a method called onCompletion().

When will the onCompletion() method be invoked?

You are writing a producer application and need to ensure proper delivery. You configure the producer with acks=all.

Which two actions should you take to ensure proper error handling?

(Select two.)
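A minimal sketch of producer properties often paired with `acks=all`, assuming a caller-supplied broker list. The values are illustrative, and the `DurableProducerConfig` helper is hypothetical. Whatever the configuration, a failed send is only noticed if the application inspects the exception passed to the send callback or calls `get()` on the `Future` that `send()` returns.

```java
import java.util.Properties;

// Producer settings commonly paired with acks=all (a sketch, not a complete config).
final class DurableProducerConfig {
    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Wait for the leader and all in-sync replicas to acknowledge each write.
        props.setProperty("acks", "all");
        // Idempotence prevents duplicates when the client retries a send.
        props.setProperty("enable.idempotence", "true");
        // Upper bound (ms) on the time to report success or failure for a send.
        props.setProperty("delivery.timeout.ms", "120000");
        return props;
    }
}
```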

Which configuration allows more time for the consumer poll to process records?
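A back-of-the-envelope sketch, assuming a fixed processing time per record (a simplification). `max.poll.records` (default 500) caps how many records one `poll()` returns, and `max.poll.interval.ms` (default 300000 ms) caps the delay allowed between `poll()` calls before the consumer is considered failed and removed from the group. The `PollBudget` helper is hypothetical.

```java
// Rough check of whether one poll() batch can be processed before the
// consumer exceeds max.poll.interval.ms and is removed from the group.
final class PollBudget {
    static boolean fitsInPollInterval(int maxPollRecords, long msPerRecord, long maxPollIntervalMs) {
        // Worst case: every record returned by a single poll() must be processed
        // before the next poll() call.
        long worstCaseMs = (long) maxPollRecords * msPerRecord;
        return worstCaseMs < maxPollIntervalMs;
    }
}
```

With the defaults and 1 s per record, a full batch needs about 500 s, which exceeds the 300 s interval; a larger `max.poll.interval.ms` or a smaller `max.poll.records` restores the margin.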

You are building a system for a retail store selling products to customers.

Which three datasets should you model as a GlobalKTable?

(Select three.)

Which statement is true about how exactly-once semantics (EOS) work in Kafka Streams?
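A minimal sketch of how EOS is switched on in Kafka Streams, assuming Kafka Streams 3.0 or later (where the `exactly_once_v2` value exists) and a hypothetical application ID. Under the hood, Streams relies on Kafka transactions so that output records, state-store changelog writes, and consumed offsets are committed atomically.

```java
import java.util.Properties;

// Kafka Streams settings that turn on exactly-once processing (sketch).
final class EosStreamsConfig {
    static Properties streamsProps(String applicationId, String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("application.id", applicationId);
        props.setProperty("bootstrap.servers", bootstrapServers);
        // exactly_once_v2 requires brokers on version 2.5 or newer.
        props.setProperty("processing.guarantee", "exactly_once_v2");
        return props;
    }
}
```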

You need to correctly join data from two Kafka topics.

Which two scenarios will allow for co-partitioning?

(Select two.)
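A hedged sketch of the idea behind co-partitioning: a keyed record's partition is a function of its key and the topic's partition count. The same key therefore lands on the same partition number in two topics only when both topics use the same partitioning scheme and the same number of partitions. The hash below is a stand-in, not Kafka's.

```java
// Illustrates why co-partitioned topics need the same partition count.
// NOTE: Kafka's default partitioner hashes the serialized key with murmur2;
// String.hashCode() is a stand-in here to keep the sketch dependency-free.
final class CoPartitioning {
    static int partitionFor(String key, int numPartitions) {
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(key.hashCode(), numPartitions);
    }
}
```

With key `"d"`, six partitions give partition 4 while four partitions give partition 0, so topics with different partition counts can place matching keys on different partition numbers.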

Which two statements about Kafka Connect Single Message Transforms (SMTs) are correct?

(Select two.)
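A minimal sketch of how an SMT is declared in a connector configuration, assuming the built-in `InsertField` transform. The configuration is shown as a Java map of the string key/value pairs a connector accepts; the alias `addSource` and the field name and value are hypothetical. SMTs operate on one record at a time, which suits light per-message changes.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Connector configuration fragment that applies one Single Message Transform.
// The alias "addSource" and the field name/value are hypothetical.
final class SmtConfig {
    static Map<String, String> insertFieldTransform() {
        Map<String, String> config = new LinkedHashMap<>();
        // Ordered, comma-separated list of transform aliases applied to each record.
        config.put("transforms", "addSource");
        // InsertField$Value modifies the record value (InsertField$Key targets the key).
        config.put("transforms.addSource.type", "org.apache.kafka.connect.transforms.InsertField$Value");
        // Adds a constant field to every record value.
        config.put("transforms.addSource.static.field", "source");
        config.put("transforms.addSource.static.value", "pos-system");
        return config;
    }
}
```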

You have a topic t1 with six partitions. You use Kafka Connect to send data from topic t1 in your Kafka cluster to Amazon S3. Kafka Connect is configured for two tasks.

How many partitions will each task process?
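Sink connector tasks consume as members of a consumer group, so the partition split follows the group's assignor. A sketch assuming a range-style split of a single topic; the `TaskSplit` helper is hypothetical and not a Connect API.

```java
import java.util.ArrayList;
import java.util.List;

// Splits partitions 0..numPartitions-1 into contiguous ranges, one per task,
// in the style of a range assignor; earlier tasks get any leftover partitions.
final class TaskSplit {
    static List<List<Integer>> rangeAssign(int numPartitions, int numTasks) {
        List<List<Integer>> assignment = new ArrayList<>();
        int base = numPartitions / numTasks;
        int extra = numPartitions % numTasks;
        int next = 0;
        for (int task = 0; task < numTasks; task++) {
            int count = base + (task < extra ? 1 : 0);
            List<Integer> partitions = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                partitions.add(next++);
            }
            assignment.add(partitions);
        }
        return assignment;
    }
}
```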

You need to configure a sink connector to write records that fail into a dead letter queue topic. Requirements:

Topic name: DLQ-Topic

Headers containing error context must be added to the messages.

Which three configuration parameters are necessary?

(Select three.)
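A minimal sketch of the error-handling properties involved, written as a Java map of connector configuration entries. It uses the topic name from the requirements; the `DlqConfig` helper is hypothetical. Other connector settings (class, topics, converters) are omitted.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sink connector error-handling settings that route failed records to DLQ-Topic.
final class DlqConfig {
    static Map<String, String> deadLetterQueueSettings() {
        Map<String, String> config = new LinkedHashMap<>();
        // Skip past bad records instead of failing the task (default is "none").
        config.put("errors.tolerance", "all");
        // Topic that receives the records that failed.
        config.put("errors.deadletterqueue.topic.name", "DLQ-Topic");
        // Add headers describing the failure to each dead-letter record.
        config.put("errors.deadletterqueue.context.headers.enable", "true");
        return config;
    }
}
```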

Your configuration parameters for a Source connector and Connect worker are:

offset.flush.interval.ms=60000

offset.flush.timeout.ms=500

offset.storage.topic=connect-offsets

offset.storage.replication.factor=-1

Which four statements match the expected behavior?

(Select four.)

You create a producer that writes messages about bank account transactions from tens of thousands of different customers into a topic.

Your consumers must process these messages with low latency and minimize consumer lag.

Processing takes ~6x longer than producing

Transactions for each bank account must be processed in order.

Which strategy should you use?
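A hedged sketch of keying by account, with hypothetical account IDs and payloads. Kafka only guarantees order within a partition, so using the account ID as the record key keeps each account's transactions in order, while many distinct accounts still spread across partitions. Because a consumer group assigns each partition to at most one consumer, the partition count bounds how far processing can be parallelized.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Routes each transaction to a partition by account ID so that all events for
// one account stay in a single partition, in the order they were produced.
// String.hashCode() stands in for Kafka's murmur2-based default partitioner.
final class AccountRouting {
    static List<List<String>> route(List<Map.Entry<String, String>> events, int numPartitions) {
        List<List<String>> partitions = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) {
            partitions.add(new ArrayList<>());
        }
        for (Map.Entry<String, String> event : events) {
            // Key = account ID, value = transaction payload.
            int p = Math.floorMod(event.getKey().hashCode(), numPartitions);
            partitions.get(p).add(event.getValue());
        }
        return partitions;
    }
}
```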

You are developing a Java application using a Kafka consumer.

You need to integrate Kafka’s client logs with your own application’s logs using log4j2.

Which Java library dependency must you include in your project?

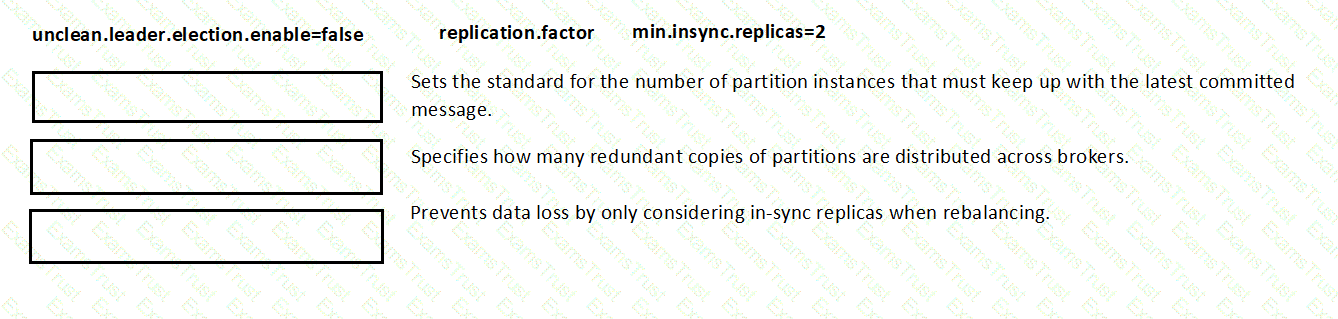

Match the topic configuration setting with the reason the setting affects topic durability.

(The settings given include unclean.leader.election.enable=false, replication.factor, and min.insync.replicas=2.)
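A small arithmetic sketch of how two of these settings interact, assuming every surviving replica is in sync. With `acks=all`, a write succeeds only while the in-sync replica set has at least `min.insync.replicas` members. Separately, `unclean.leader.election.enable=false` stops an out-of-sync replica from becoming leader, which would otherwise lose acknowledged data. The `Durability` helper is hypothetical.

```java
// With acks=all, a write succeeds only while the in-sync replica set has at
// least min.insync.replicas members, so a partition with replication factor RF
// keeps accepting such writes through RF - min.insync.replicas replica failures.
final class Durability {
    static int tolerableFailuresForAcksAll(int replicationFactor, int minInsyncReplicas) {
        if (minInsyncReplicas < 1 || minInsyncReplicas > replicationFactor) {
            throw new IllegalArgumentException("need 1 <= min.insync.replicas <= replication factor");
        }
        return replicationFactor - minInsyncReplicas;
    }
}
```

For replication.factor=3 and min.insync.replicas=2, one replica can be lost while `acks=all` writes continue.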